| Title: | Utilities for Scoring and Assessing Predictions |

| Version: | 2.1.2 |

| Language: | en-GB |

| Description: | Facilitate the evaluation of forecasts in a convenient framework based on data.table. It allows user to to check their forecasts and diagnose issues, to visualise forecasts and missing data, to transform data before scoring, to handle missing forecasts, to aggregate scores, and to visualise the results of the evaluation. The package mostly focuses on the evaluation of probabilistic forecasts and allows evaluating several different forecast types and input formats. Find more information about the package in the Vignettes as well as in the accompanying paper, <doi:10.48550/arXiv.2205.07090>. |

| License: | MIT + file LICENSE |

| Encoding: | UTF-8 |

| LazyData: | true |

| Imports: | checkmate, cli, data.table (≥ 1.16.0), ggplot2 (≥ 3.4.0), methods, Metrics, purrr, scoringRules (≥ 1.1.3), stats |

| Suggests: | ggdist, kableExtra, knitr, magrittr, rmarkdown, testthat (≥ 3.1.9), vdiffr |

| Config/Needs/website: | r-lib/pkgdown, amirmasoudabdol/preferably |

| Config/testthat/edition: | 3 |

| RoxygenNote: | 7.3.2 |

| URL: | https://doi.org/10.48550/arXiv.2205.07090, https://epiforecasts.io/scoringutils/, https://github.com/epiforecasts/scoringutils |

| BugReports: | https://github.com/epiforecasts/scoringutils/issues |

| VignetteBuilder: | knitr |

| Depends: | R (≥ 4.1) |

| NeedsCompilation: | no |

| Packaged: | 2025-08-25 07:24:53 UTC; nikos |

| Author: | Nikos Bosse |

| Maintainer: | Nikos Bosse <nikosbosse@gmail.com> |

| Repository: | CRAN |

| Date/Publication: | 2025-08-25 08:10:02 UTC |

scoringutils: Utilities for Scoring and Assessing Predictions

Description

Facilitate the evaluation of forecasts in a convenient framework based on data.table. It allows user to to check their forecasts and diagnose issues, to visualise forecasts and missing data, to transform data before scoring, to handle missing forecasts, to aggregate scores, and to visualise the results of the evaluation. The package mostly focuses on the evaluation of probabilistic forecasts and allows evaluating several different forecast types and input formats. Find more information about the package in the Vignettes as well as in the accompanying paper, doi:10.48550/arXiv.2205.07090.

Author(s)

Maintainer: Nikos Bosse nikosbosse@gmail.com (ORCID)

Authors:

Sam Abbott contact@samabbott.co.uk (ORCID)

Hugo Gruson hugo.gruson+R@normalesup.org (ORCID)

Sebastian Funk sebastian.funk@lshtm.ac.uk

Other contributors:

Johannes Bracher johannes.bracher@kit.edu (ORCID) [contributor]

Toshiaki Asakura toshiaki.asa9ra@gmail.com (ORCID) [contributor]

James Mba Azam james.azam@lshtm.ac.uk (ORCID) [contributor]

Michael Chirico michaelchirico4@gmail.com (ORCID) [contributor]

See Also

Useful links:

Report bugs at https://github.com/epiforecasts/scoringutils/issues

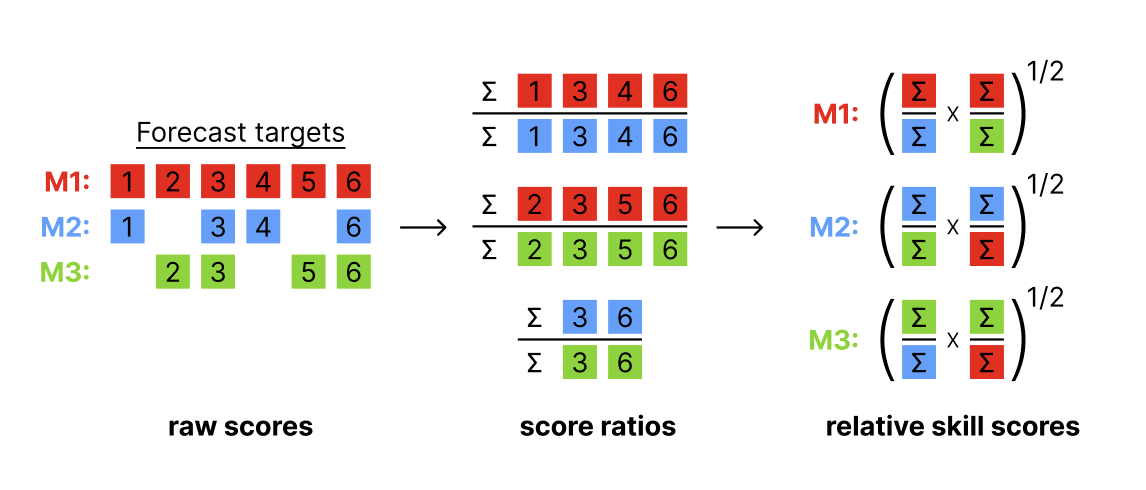

Add relative skill scores based on pairwise comparisons

Description

Adds a columns with relative skills computed by running

pairwise comparisons on the scores.

For more information on

the computation of relative skill, see get_pairwise_comparisons().

Relative skill will be calculated for the aggregation level specified in

by.

Usage

add_relative_skill(

scores,

compare = "model",

by = NULL,

metric = intersect(c("wis", "crps", "brier_score"), names(scores)),

baseline = NULL,

...

)

Arguments

scores |

An object of class |

compare |

Character vector with a single colum name that defines the elements for the pairwise comparison. For example, if this is set to "model" (the default), then elements of the "model" column will be compared. |

by |

Character vector with column names that define further grouping

levels for the pairwise comparisons. By default this is |

metric |

A string with the name of the metric for which a relative skill shall be computed. By default this is either "crps", "wis" or "brier_score" if any of these are available. |

baseline |

A string with the name of a model. If a baseline is

given, then a scaled relative skill with respect to the baseline will be

returned. By default ( |

... |

Additional arguments for the comparison between two models. See

|

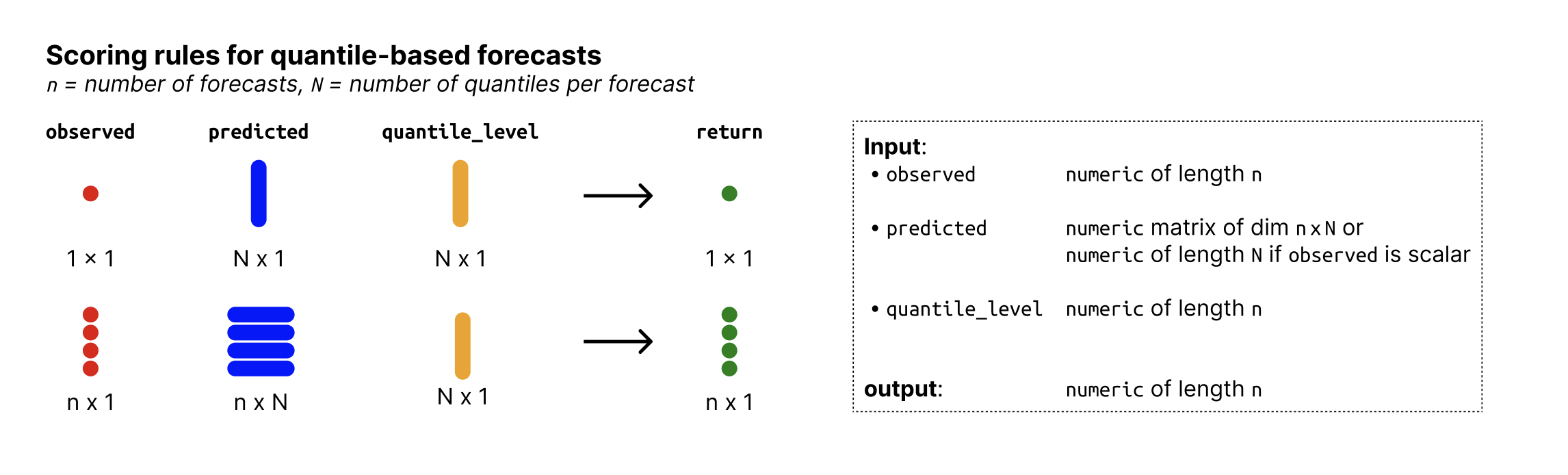

Absolute error of the median (quantile-based version)

Description

Compute the absolute error of the median calculated as

|\text{observed} - \text{median prediction}|

The median prediction is the predicted value for which quantile_level == 0.5.

The function requires 0.5 to be among the quantile levels in quantile_level.

Usage

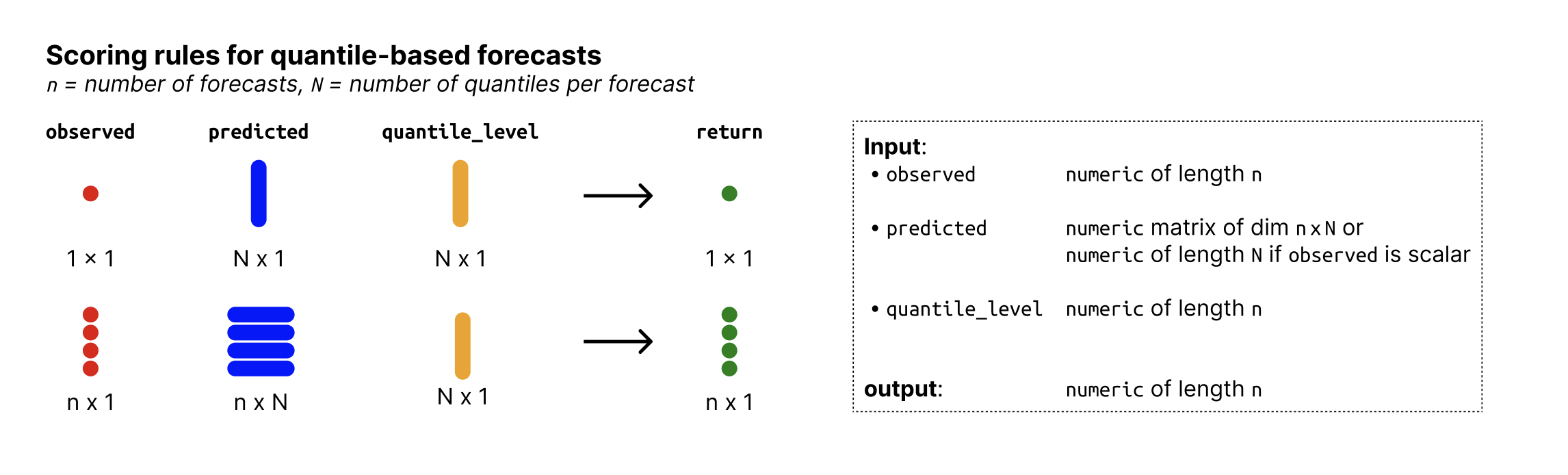

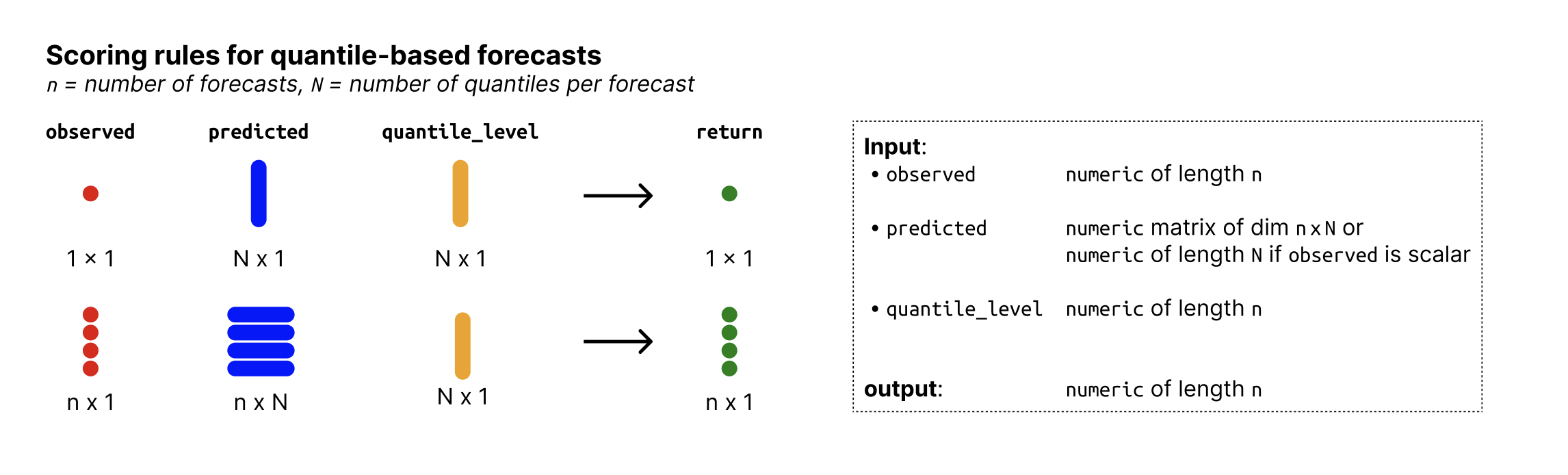

ae_median_quantile(observed, predicted, quantile_level)

Arguments

observed |

Numeric vector of size n with the observed values. |

predicted |

Numeric nxN matrix of predictive

quantiles, n (number of rows) being the number of forecasts (corresponding

to the number of observed values) and N

(number of columns) the number of quantiles per forecast.

If |

quantile_level |

Vector of of size N with the quantile levels for which predictions were made. |

Value

Numeric vector of length N with the absolute error of the median.

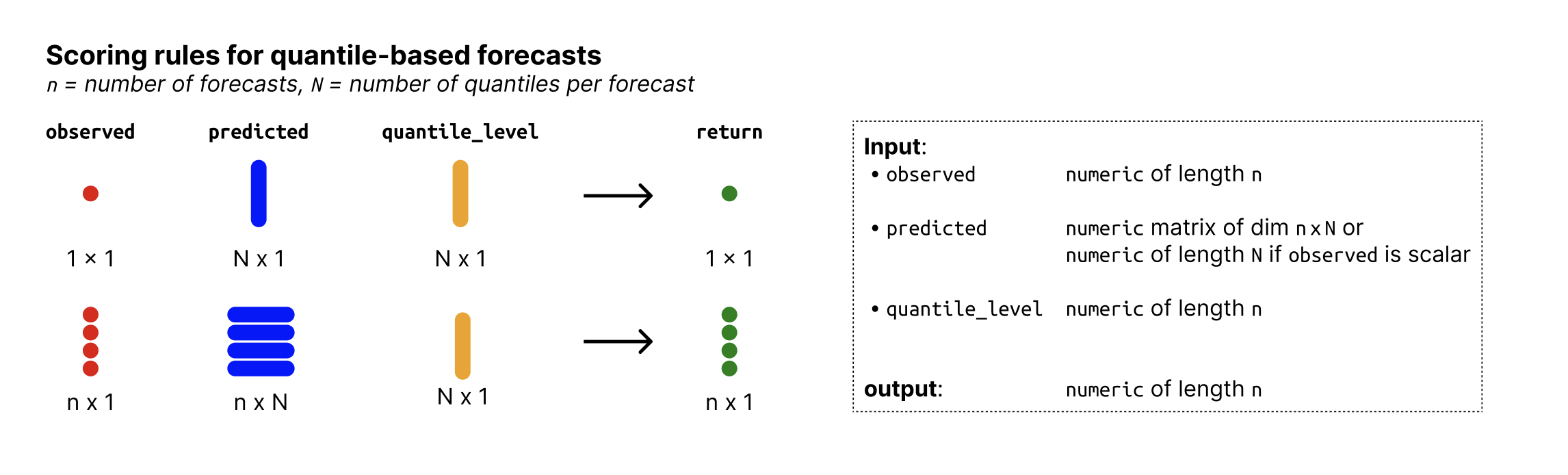

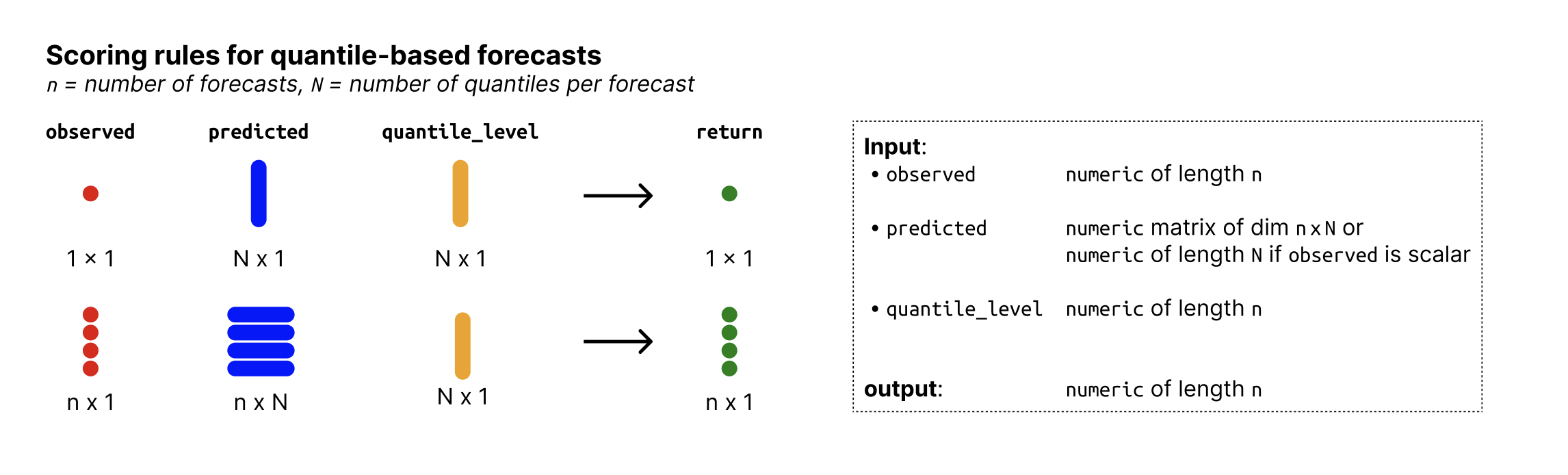

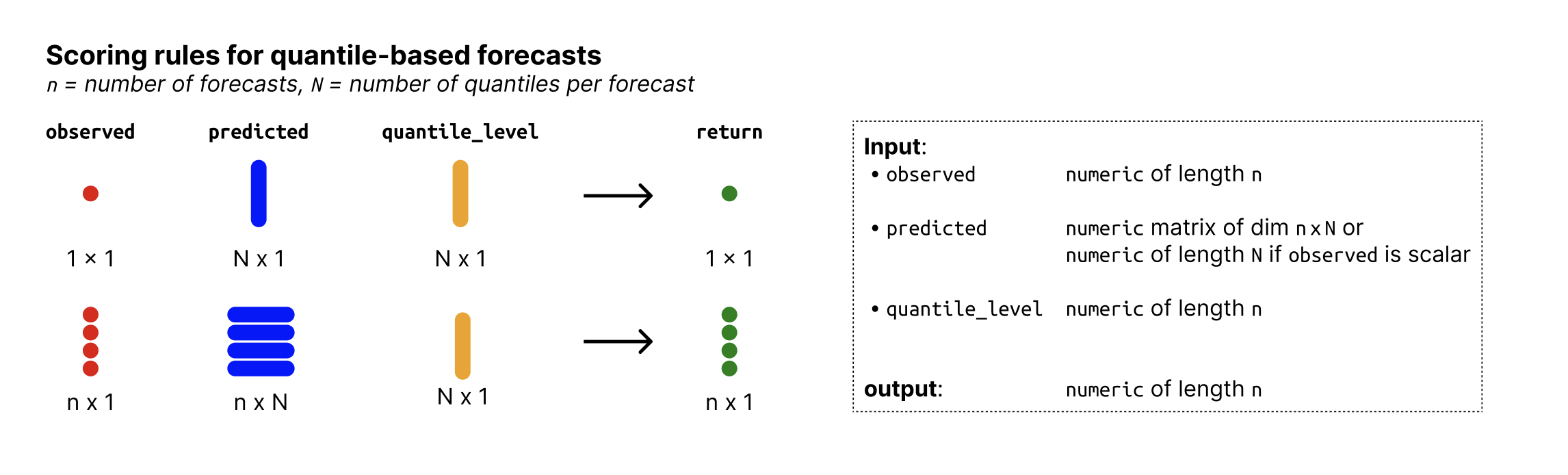

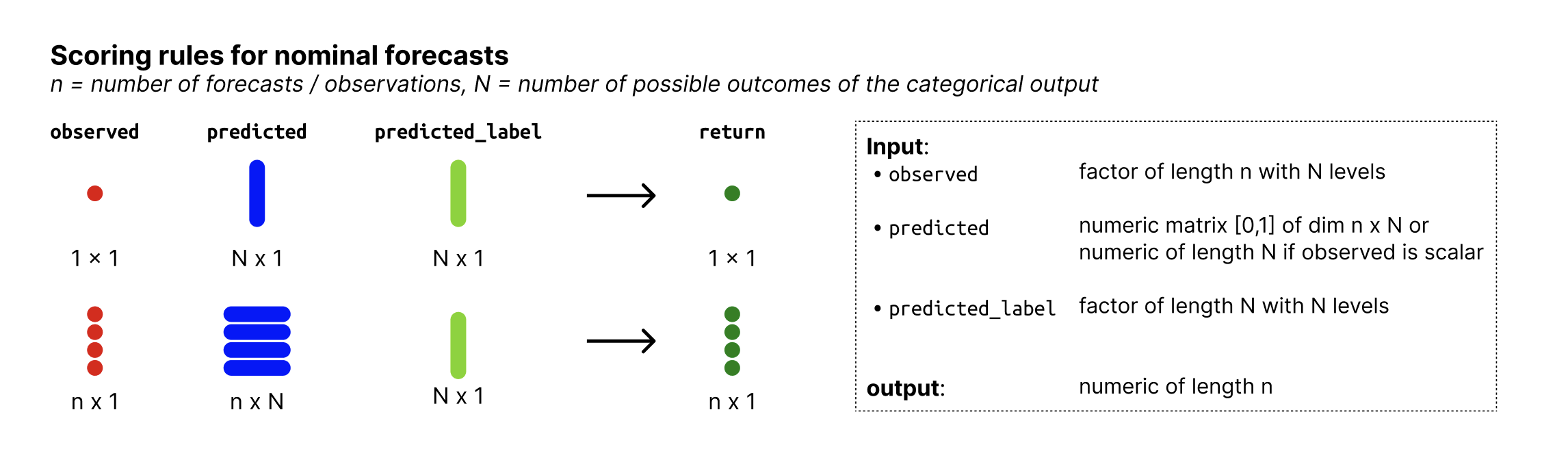

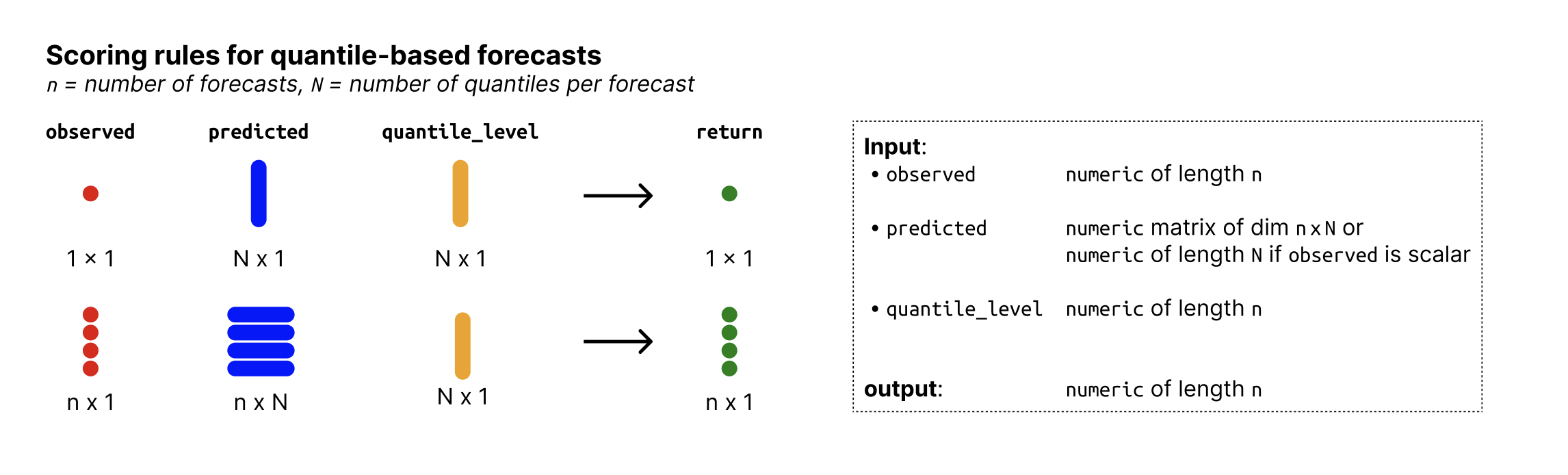

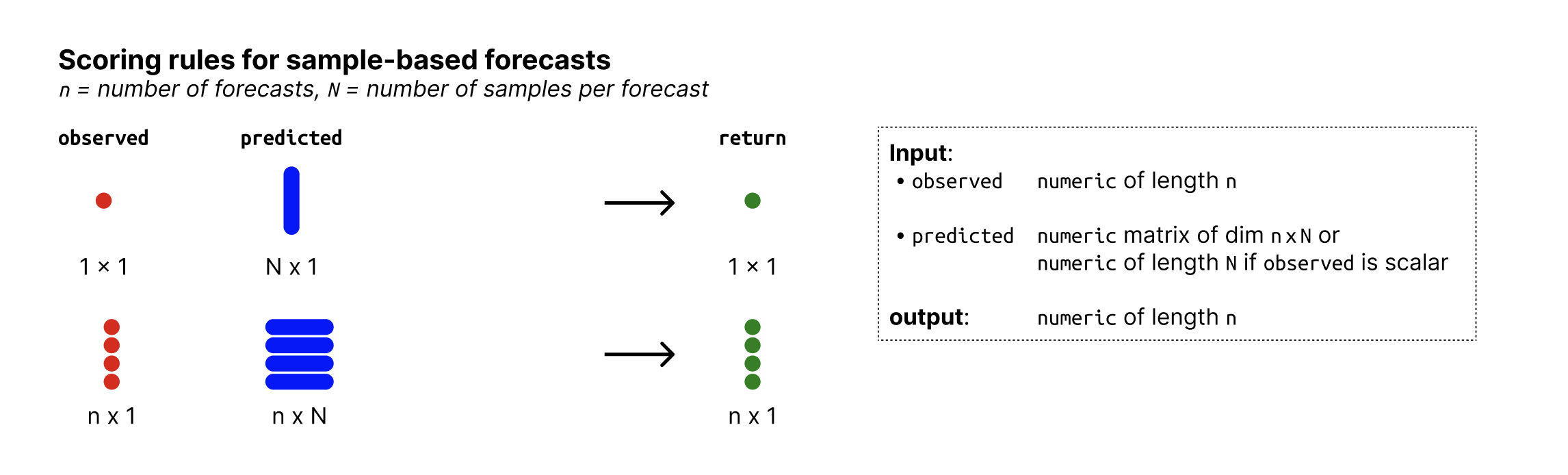

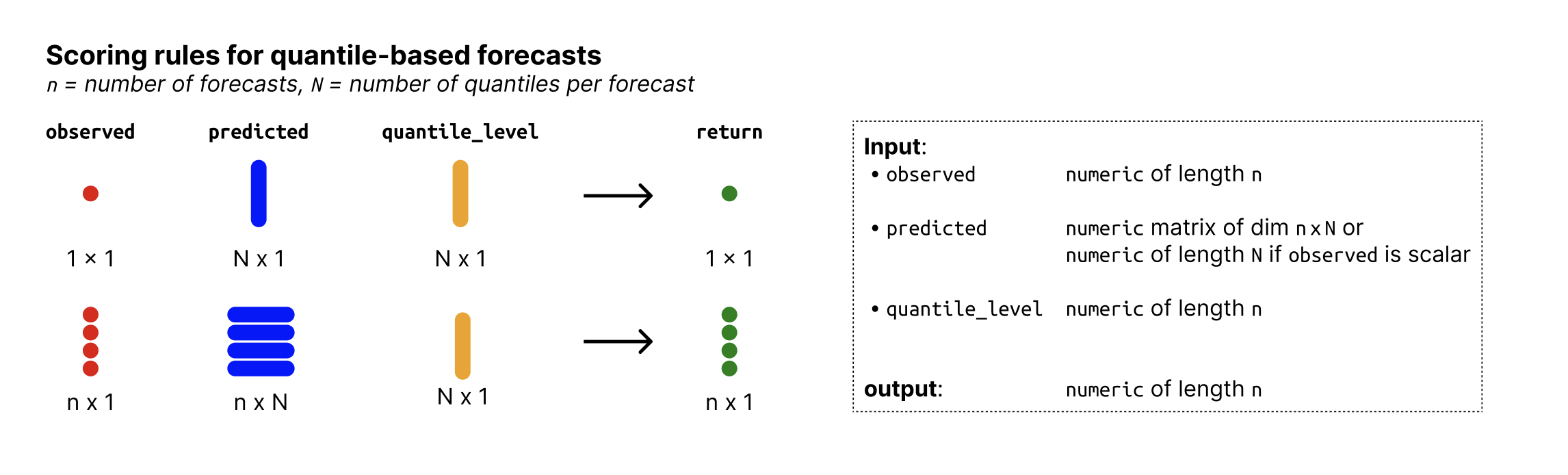

Input format

Overview of required input format for quantile-based forecasts

See Also

Examples

observed <- rnorm(30, mean = 1:30)

predicted_values <- replicate(3, rnorm(30, mean = 1:30))

ae_median_quantile(

observed, predicted_values, quantile_level = c(0.2, 0.5, 0.8)

)

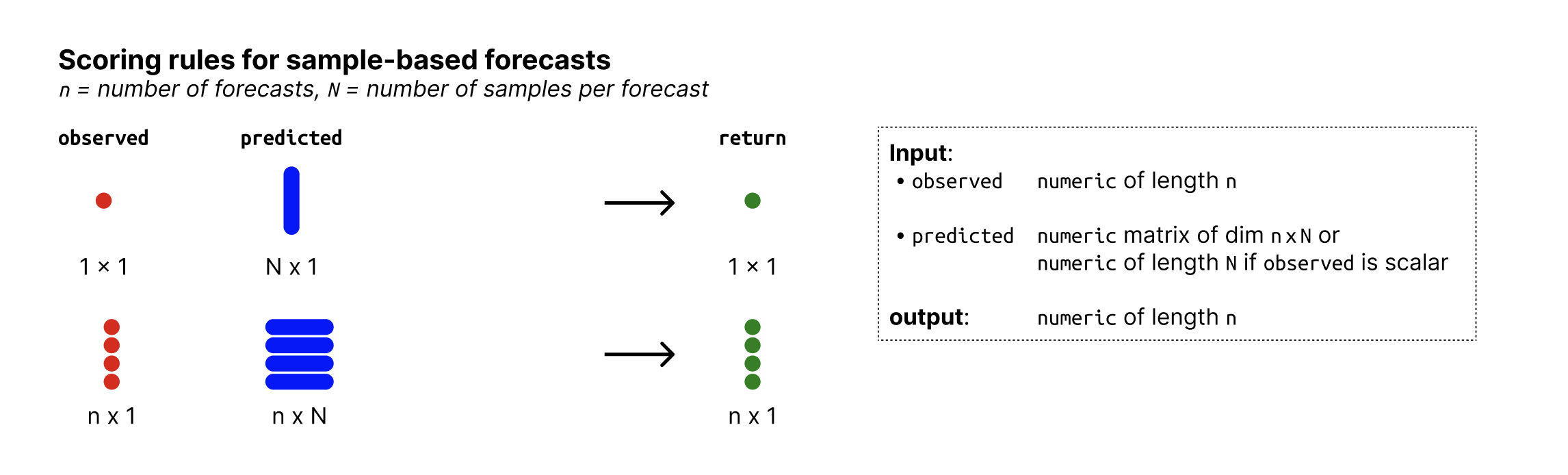

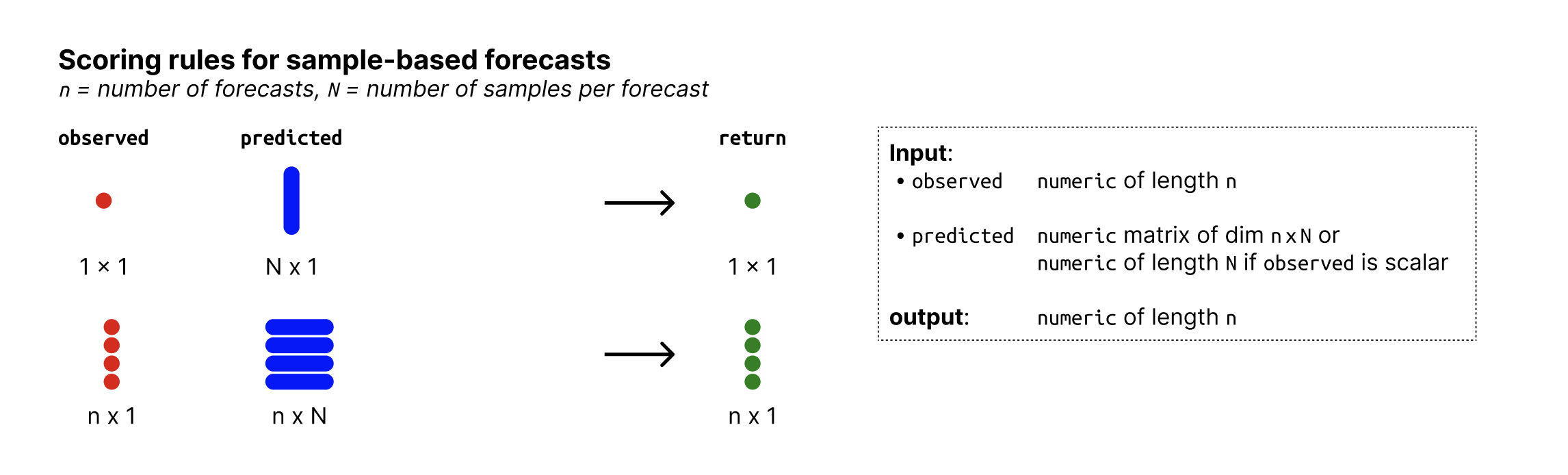

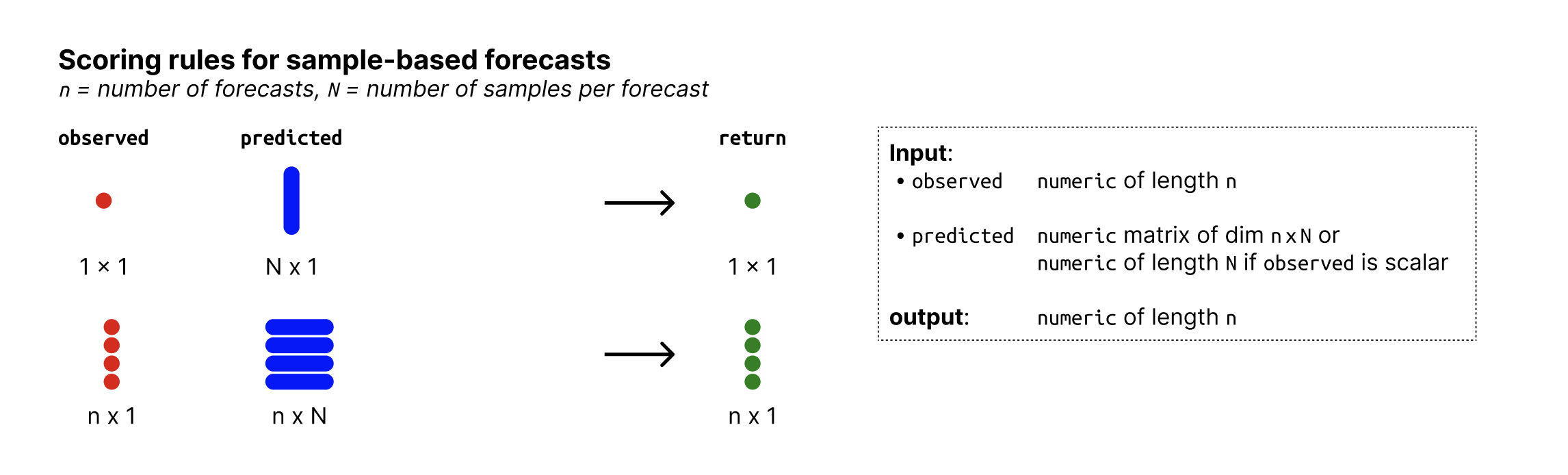

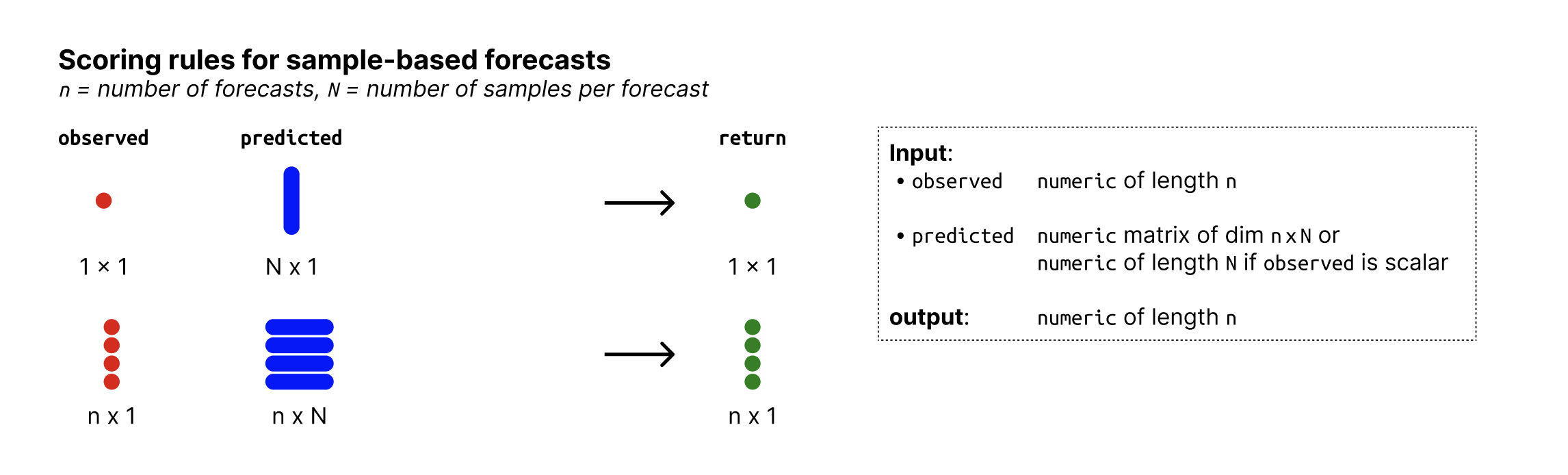

Absolute error of the median (sample-based version)

Description

Absolute error of the median calculated as

|\text{observed} - \text{median prediction}|

where the median prediction is calculated as the median of the predictive samples.

Usage

ae_median_sample(observed, predicted)

Arguments

observed |

A vector with observed values of size n |

predicted |

nxN matrix of predictive samples, n (number of rows) being

the number of data points and N (number of columns) the number of Monte

Carlo samples. Alternatively, |

Value

Numeric vector of length n with the absolute errors of the median.

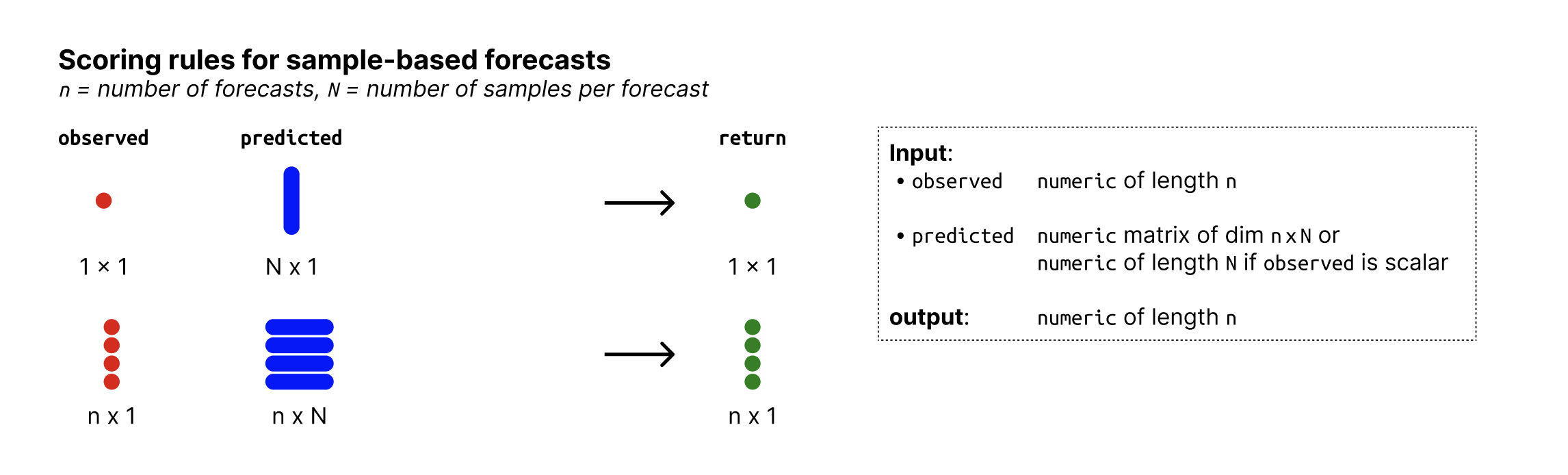

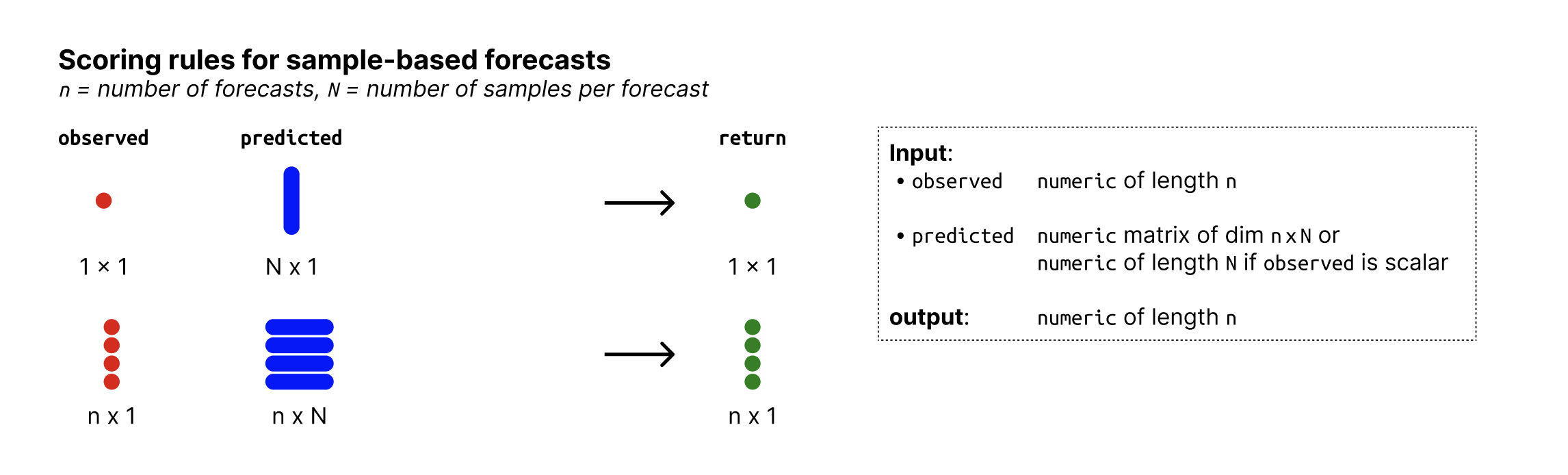

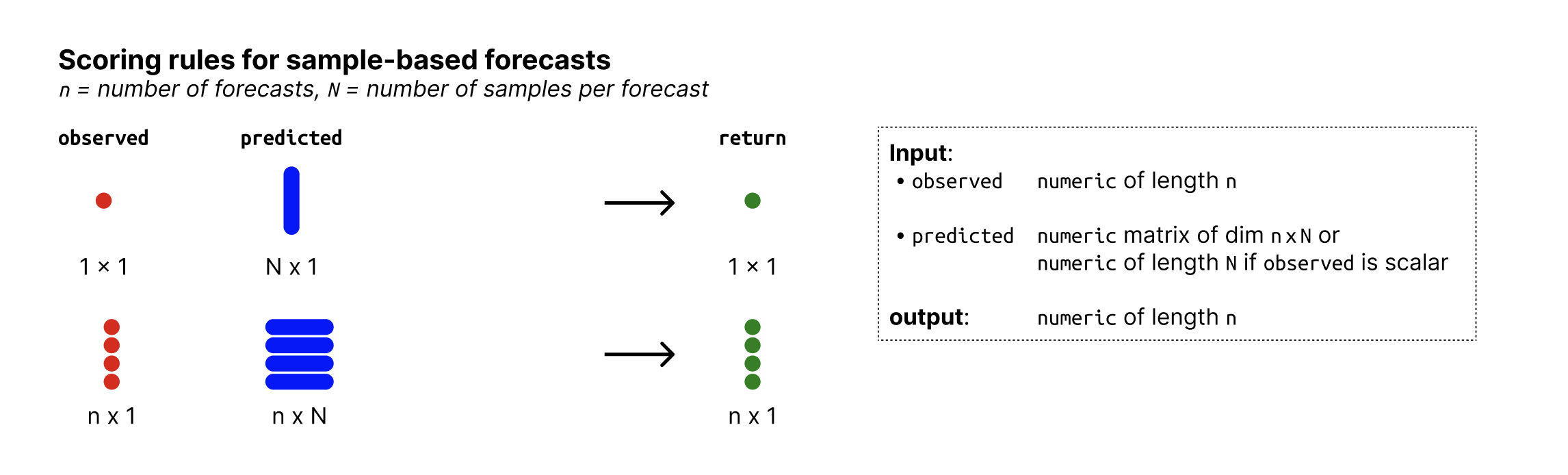

Input format

Overview of required input format for sample-based forecasts

See Also

Examples

observed <- rnorm(30, mean = 1:30)

predicted_values <- matrix(rnorm(30, mean = 1:30))

ae_median_sample(observed, predicted_values)

Apply a list of functions to a data table of forecasts

Description

This helper function applies scoring rules (stored as a list of

functions) to a data table of forecasts. apply_metrics is used within

score() to apply all scoring rules to the data.

Scoring rules are wrapped in run_safely() to catch errors and to make

sure that only arguments are passed to the scoring rule that are actually

accepted by it.

Usage

apply_metrics(forecast, metrics, ...)

Arguments

forecast |

A forecast object (a validated data.table with predicted and observed values). |

metrics |

A named list of scoring functions. Names will be used as

column names in the output. See |

... |

Additional arguments to be passed to the scoring rules. Note that

this is currently not used, as all calls to |

Value

A data table with the forecasts and the calculated metrics.

Create a forecast object for binary forecasts

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Usage

as_forecast_binary(data, ...)

## Default S3 method:

as_forecast_binary(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

...

)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

Value

A forecast object of class forecast_binary

Required input

The input needs to be a data.frame or similar with the following columns:

-

observed:factorwith exactly two levels representing the observed values. The highest factor level is assumed to be the reference level. This means that corresponding value inpredictedrepresent the probability that the observed value is equal to the highest factor level. -

predicted:numericwith predicted probabilities, representing the probability that the corresponding value inobservedis equal to the highest available factor level.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_binary data set for an example.

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

See Also

Other functions to create forecast objects:

as_forecast_nominal(),

as_forecast_ordinal(),

as_forecast_point(),

as_forecast_quantile(),

as_forecast_sample()

Examples

as_forecast_binary(

example_binary,

predicted = "predicted",

forecast_unit = c("model", "target_type", "target_end_date",

"horizon", "location")

)

General information on creating a forecast object

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

Common functionality for as_forecast_<type> functions

Description

Common functionality for as_forecast_<type> functions

Usage

as_forecast_generic(data, forecast_unit = NULL, ...)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

forecast_unit |

(optional) Name of the columns in |

... |

Named arguments that are used to rename columns. The names of the arguments are the names of the columns that should be renamed. The values are the new names. |

Details

This function splits out part of the functionality of

as_forecast_<type> that is the same for all as_forecast_<type> functions.

It renames the required columns, where appropriate, and sets the forecast

unit.

Create a forecast object for nominal forecasts

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Usage

as_forecast_nominal(data, ...)

## Default S3 method:

as_forecast_nominal(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

predicted_label = NULL,

...

)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

predicted_label |

(optional) Name of the column in |

Details

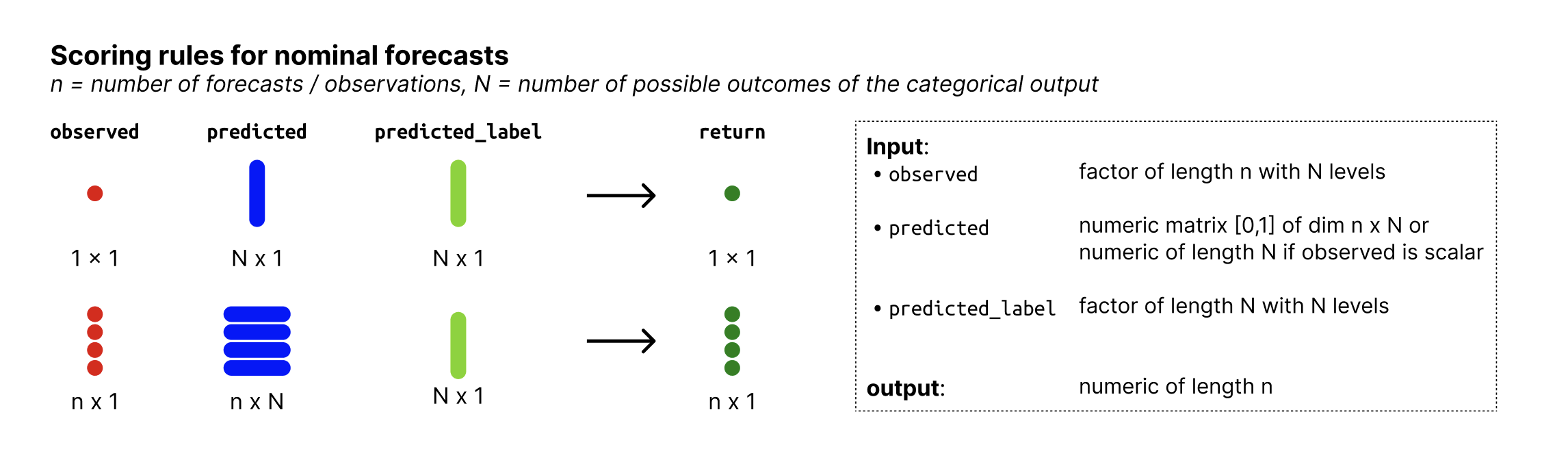

Nominal forecasts are a form of categorical forecasts and represent a generalisation of binary forecasts to multiple outcomes. The possible outcomes that the observed values can assume are not ordered.

Value

A forecast object of class forecast_nominal

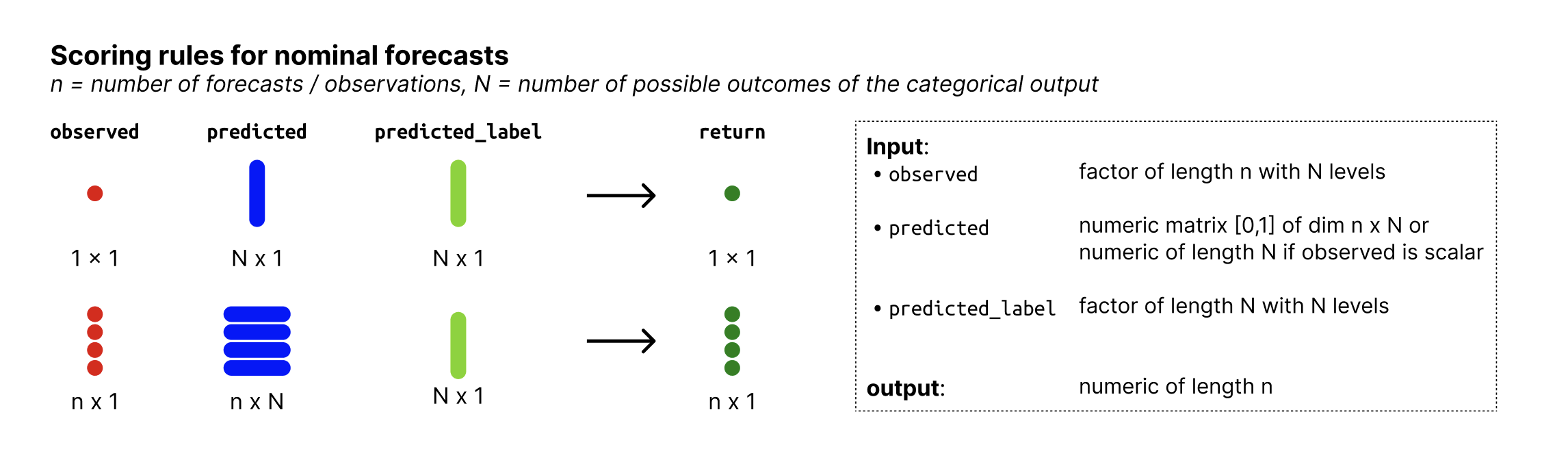

Required input

The input needs to be a data.frame or similar for the default method with the following columns:

-

observed: Column with observed values of typefactorwith N levels, where N is the number of possible outcomes. The levels of the factor represent the possible outcomes that the observed values can assume. -

predicted:numericcolumn with predicted probabilities. The values represent the probability that the observed value is equal to the factor level denoted inpredicted_label. Note that forecasts must be complete, i.e. there must be a probability assigned to every possible outcome and those probabilities must sum to one. -

predicted_label:factorwith N levels, denoting the outcome that the probabilities inpredictedcorrespond to.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_nominal data set for an example.

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

See Also

Other functions to create forecast objects:

as_forecast_binary(),

as_forecast_ordinal(),

as_forecast_point(),

as_forecast_quantile(),

as_forecast_sample()

Examples

as_forecast_nominal(

na.omit(example_nominal),

predicted = "predicted",

forecast_unit = c("model", "target_type", "target_end_date",

"horizon", "location")

)

Create a forecast object for ordinal forecasts

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Usage

as_forecast_ordinal(data, ...)

## Default S3 method:

as_forecast_ordinal(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

predicted_label = NULL,

...

)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

predicted_label |

(optional) Name of the column in |

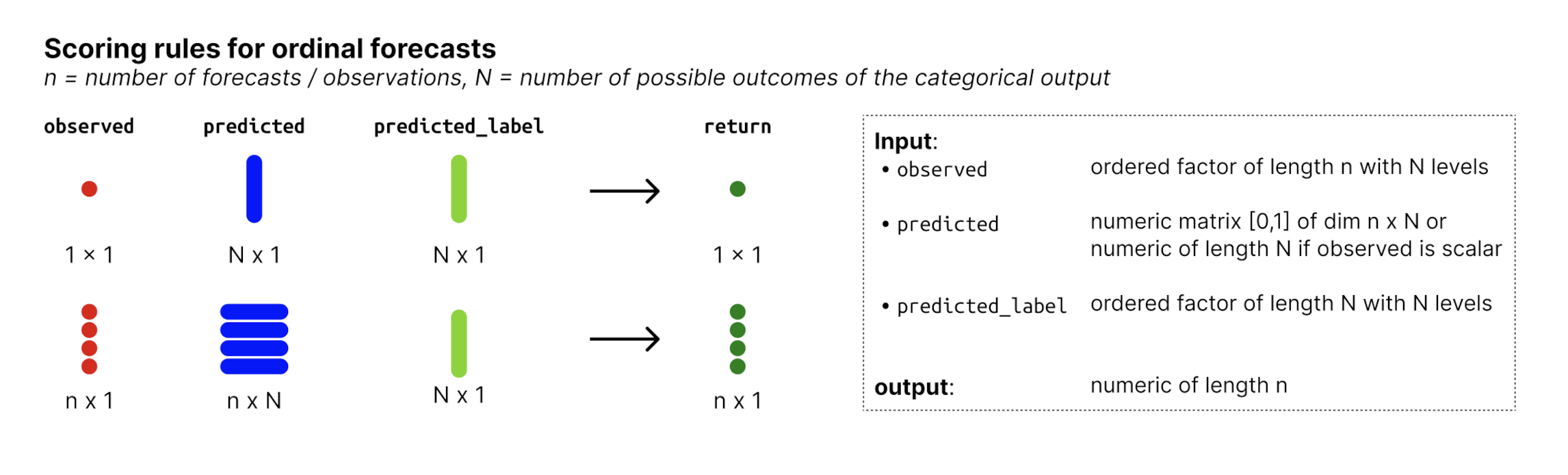

Details

Ordinal forecasts are a form of categorical forecasts and represent a generalisation of binary forecasts to multiple outcomes. The possible outcomes that the observed values can assume are ordered.

Value

A forecast object of class forecast_ordinal

Required input

The input needs to be a data.frame or similar for the default method with the following columns:

-

observed: Column with observed values of typefactorwith N ordered levels, where N is the number of possible outcomes. The levels of the factor represent the possible outcomes that the observed values can assume. -

predicted:numericcolumn with predicted probabilities. The values represent the probability that the observed value is equal to the factor level denoted inpredicted_label. Note that forecasts must be complete, i.e. there must be a probability assigned to every possible outcome and those probabilities must sum to one. -

predicted_label:factorwith N levels, denoting the outcome that the probabilities inpredictedcorrespond to.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_ordinal data set for an example.

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

See Also

Other functions to create forecast objects:

as_forecast_binary(),

as_forecast_nominal(),

as_forecast_point(),

as_forecast_quantile(),

as_forecast_sample()

Examples

as_forecast_ordinal(

na.omit(example_ordinal),

predicted = "predicted",

forecast_unit = c("model", "target_type", "target_end_date",

"horizon", "location")

)

Create a forecast object for point forecasts

Description

When converting a forecast_quantile object into a forecast_point object,

the 0.5 quantile is extracted and returned as the point forecast.

Usage

as_forecast_point(data, ...)

## Default S3 method:

as_forecast_point(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

...

)

## S3 method for class 'forecast_quantile'

as_forecast_point(data, ...)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

Value

A forecast object of class forecast_point

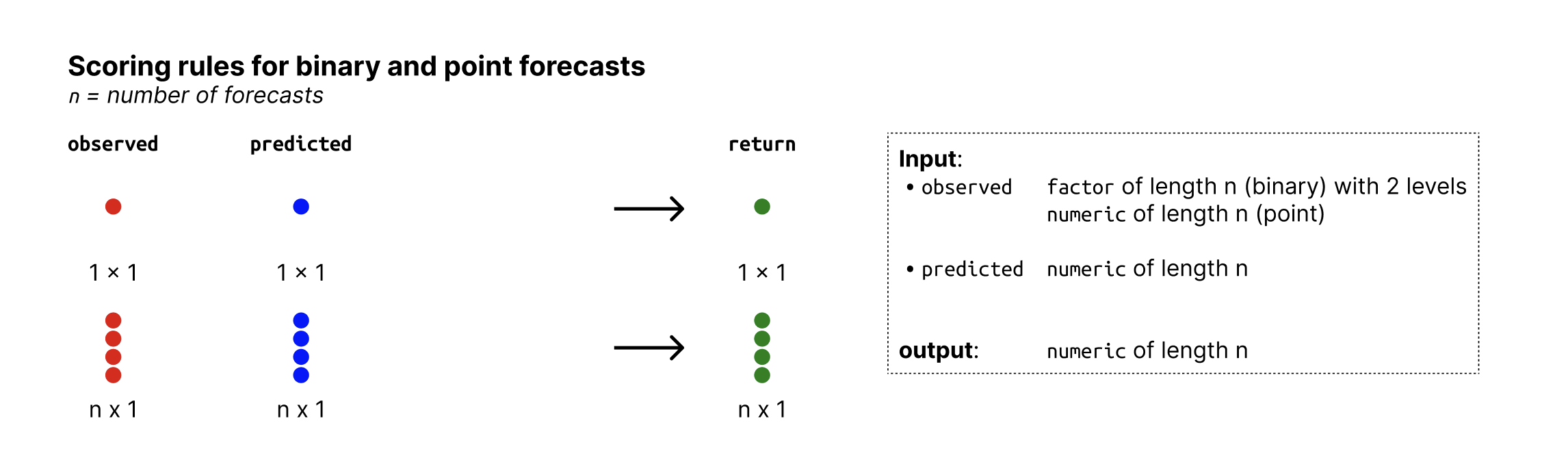

Required input

The input needs to be a data.frame or similar for the default method with the following columns:

-

observed: Column of typenumericwith observed values. -

predicted: Column of typenumericwith predicted values.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_point data set for an example.

See Also

Other functions to create forecast objects:

as_forecast_binary(),

as_forecast_nominal(),

as_forecast_ordinal(),

as_forecast_quantile(),

as_forecast_sample()

Create a forecast object for quantile-based forecasts

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Usage

as_forecast_quantile(data, ...)

## Default S3 method:

as_forecast_quantile(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

quantile_level = NULL,

...

)

## S3 method for class 'forecast_sample'

as_forecast_quantile(

data,

probs = c(0.05, 0.25, 0.5, 0.75, 0.95),

type = 7,

...

)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

quantile_level |

(optional) Name of the column in |

probs |

A numeric vector of quantile levels for which

quantiles will be computed. Corresponds to the |

type |

Type argument passed down to the quantile function. For more

information, see |

Value

A forecast object of class forecast_quantile

Required input

The input needs to be a data.frame or similar for the default method with the following columns:

-

observed: Column of typenumericwith observed values. -

predicted: Column of typenumericwith predicted values. Predicted values represent quantiles of the predictive distribution. -

quantile_level: Column of typenumeric, denoting the quantile level of the corresponding predicted value. Quantile levels must be between 0 and 1.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_quantile data set for an example.

Converting from forecast_sample to forecast_quantile

When creating a forecast_quantile object from a forecast_sample object,

the quantiles are estimated by computing empircal quantiles from the samples

via quantile(). Note that empirical quantiles are a biased estimator for

the true quantiles in particular in the tails of the distribution and

when the number of available samples is low.

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

See Also

Other functions to create forecast objects:

as_forecast_binary(),

as_forecast_nominal(),

as_forecast_ordinal(),

as_forecast_point(),

as_forecast_sample()

Examples

as_forecast_quantile(

example_quantile,

predicted = "predicted",

forecast_unit = c("model", "target_type", "target_end_date",

"horizon", "location")

)

Create a forecast object for sample-based forecasts

Description

Process and validate a data.frame (or similar) or similar with forecasts

and observations. If the input passes all input checks, those functions will

be converted to a forecast object. A forecast object is a data.table with

a class forecast and an additional class that depends on the forecast type.

The arguments observed, predicted, etc. make it possible to rename

existing columns of the input data to match the required columns for a

forecast object. Using the argument forecast_unit, you can specify

the columns that uniquely identify a single forecast (and thereby removing

other, unneeded columns. See section "Forecast Unit" below for details).

Usage

as_forecast_sample(data, ...)

## Default S3 method:

as_forecast_sample(

data,

forecast_unit = NULL,

observed = NULL,

predicted = NULL,

sample_id = NULL,

...

)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

... |

Unused |

forecast_unit |

(optional) Name of the columns in |

observed |

(optional) Name of the column in |

predicted |

(optional) Name of the column in |

sample_id |

(optional) Name of the column in |

Value

A forecast object of class forecast_sample

Required input

The input needs to be a data.frame or similar for the default method with the following columns:

-

observed: Column of typenumericwith observed values. -

predicted: Column of typenumericwith predicted values. Predicted values represent random samples from the predictive distribution. -

sample_id: Column of any type with unique identifiers (unique within a single forecast) for each sample.

For convenience, we recommend an additional column model holding the name

of the forecaster or model that produced a prediction, but this is not

strictly necessary.

See the example_sample_continuous and example_sample_discrete data set for an example

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

See Also

Other functions to create forecast objects:

as_forecast_binary(),

as_forecast_nominal(),

as_forecast_ordinal(),

as_forecast_point(),

as_forecast_quantile()

Create an object of class scores from data

Description

This convenience function wraps new_scores() and validates

the scores object.

Usage

as_scores(scores, metrics)

Arguments

scores |

A data.table or similar with scores as produced by |

metrics |

A character vector with the names of the scores (i.e. the names of the scoring rules used for scoring). |

Value

An object of class scores

Assert Inputs Have Matching Dimensions

Description

Function assesses whether input dimensions match. In the following, n is the number of observations / forecasts. Scalar values may be repeated to match the length of the other input. Allowed options are therefore:

-

observedis vector of length 1 or length n -

predictedis:a vector of of length 1 or length n

a matrix with n rows and 1 column

Usage

assert_dims_ok_point(observed, predicted)

Arguments

observed |

Input to be checked. Should be a factor of length n with

exactly two levels, holding the observed values.

The highest factor level is assumed to be the reference level. This means

that |

predicted |

Input to be checked. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that input is a forecast object and passes validations

Description

Assert that an object is a forecast object (i.e. a data.table with a class

forecast and an additional class forecast_<type> corresponding to the

forecast type).

See the corresponding assert_forecast_<type> functions for more details on

the required input formats.

Usage

## S3 method for class 'forecast_binary'

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

## S3 method for class 'forecast_point'

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

## S3 method for class 'forecast_quantile'

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

## S3 method for class 'forecast_sample'

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

## Default S3 method:

assert_forecast(forecast, forecast_type = NULL, verbose = TRUE, ...)

Arguments

forecast |

A forecast object (a validated data.table with predicted and observed values). |

forecast_type |

(optional) The forecast type you expect the forecasts

to have. If the forecast type as determined by |

verbose |

Logical. If |

... |

Currently unused. You cannot pass additional arguments to scoring

functions via |

Value

Returns NULL invisibly.

Examples

forecast <- as_forecast_binary(example_binary)

assert_forecast(forecast)

Validation common to all forecast types

Description

The function runs input checks that apply to all input data, regardless of forecast type. The function

asserts that the forecast is a data.table which has columns

observedandpredictedchecks the forecast type and forecast unit

checks there are no duplicate forecasts

if appropriate, checks the number of samples / quantiles is the same for all forecasts.

Usage

assert_forecast_generic(data, verbose = TRUE)

Arguments

data |

A data.table with forecasts and observed values that should be validated. |

verbose |

Logical. If |

Value

returns the input

Assert that forecast type is as expected

Description

Assert that forecast type is as expected

Usage

assert_forecast_type(data, actual = get_forecast_type(data), desired = NULL)

Arguments

data |

A forecast object. |

actual |

The actual forecast type of the data |

desired |

The desired forecast type of the data |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for binary forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring binary forecasts.

Usage

assert_input_binary(observed, predicted)

Arguments

observed |

Input to be checked. Should be a factor of length n with

exactly two levels, holding the observed values.

The highest factor level is assumed to be the reference level. This means

that |

predicted |

Input to be checked. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for categorical forecasts

Description

Function assesses whether the inputs correspond to the requirements for scoring categorical, i.e. either nominal or ordinal forecasts.

Usage

assert_input_categorical(observed, predicted, predicted_label, ordered = NA)

Arguments

observed |

Input to be checked. Should be a factor of length n with N levels holding the observed values. n is the number of observations and N is the number of possible outcomes the observed values can assume. |

predicted |

Input to be checked. Should be nxN matrix of predicted

probabilities, n (number of rows) being the number of data points and N

(number of columns) the number of possible outcomes the observed values

can assume.

If |

predicted_label |

Factor of length N with N levels, where N is the number of possible outcomes the observed values can assume. |

ordered |

Value indicating whether factors have to be ordered or not.

Defaults to |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for interval-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring interval-based forecasts.

Usage

assert_input_interval(observed, lower, upper, interval_range)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

lower |

Input to be checked. Should be a numeric vector of size n that holds the predicted value for the lower bounds of the prediction intervals. |

upper |

Input to be checked. Should be a numeric vector of size n that holds the predicted value for the upper bounds of the prediction intervals. |

interval_range |

Input to be checked. Should be a vector of size n that denotes the interval range in percent. E.g. a value of 50 denotes a (25%, 75%) prediction interval. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for nominal forecasts

Description

Function assesses whether the inputs correspond to the requirements for scoring nominal forecasts.

Usage

assert_input_nominal(observed, predicted, predicted_label)

Arguments

observed |

Input to be checked. Should be an unordered factor of length n with N levels holding the observed values. n is the number of observations and N is the number of possible outcomes the observed values can assume. |

predicted |

Input to be checked. Should be nxN matrix of predicted

probabilities, n (number of rows) being the number of data points and N

(number of columns) the number of possible outcomes the observed values

can assume.

If |

predicted_label |

Unordered factor of length N with N levels, where N is the number of possible outcomes the observed values can assume. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for ordinal forecasts

Description

Function assesses whether the inputs correspond to the requirements for scoring ordinal forecasts.

Usage

assert_input_ordinal(observed, predicted, predicted_label)

Arguments

observed |

Input to be checked. Should be an ordered factor of length n with N levels holding the observed values. n is the number of observations and N is the number of possible outcomes the observed values can assume. |

predicted |

Input to be checked. Should be nxN matrix of predicted

probabilities, n (number of rows) being the number of data points and N

(number of columns) the number of possible outcomes the observed values

can assume.

If |

predicted_label |

Ordered factor of length N with N levels, where N is the number of possible outcomes the observed values can assume. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

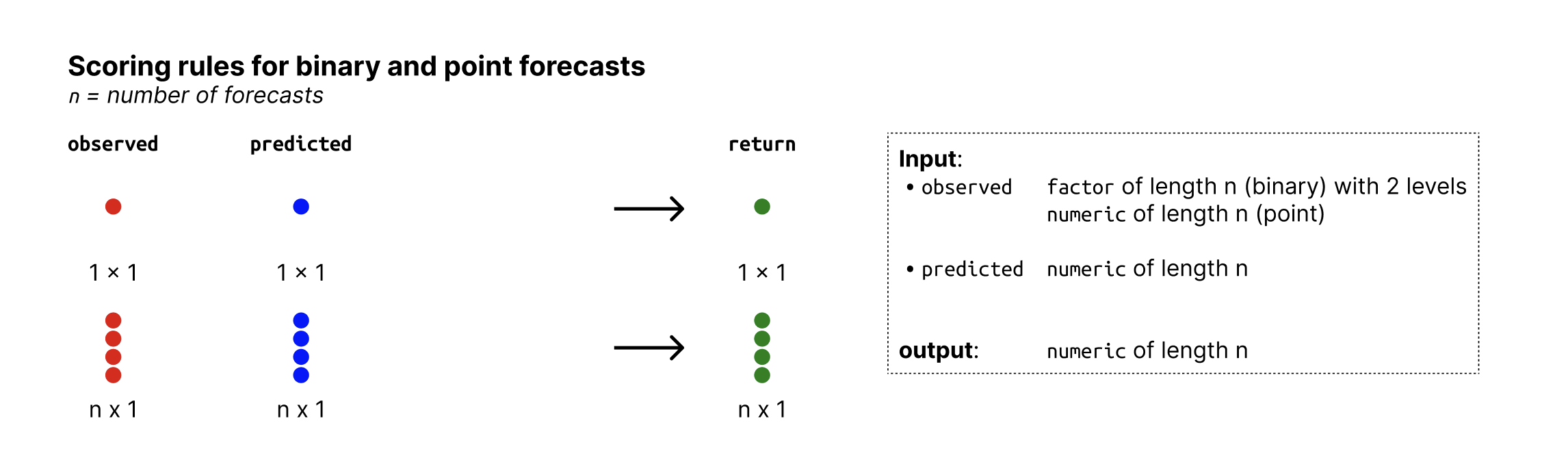

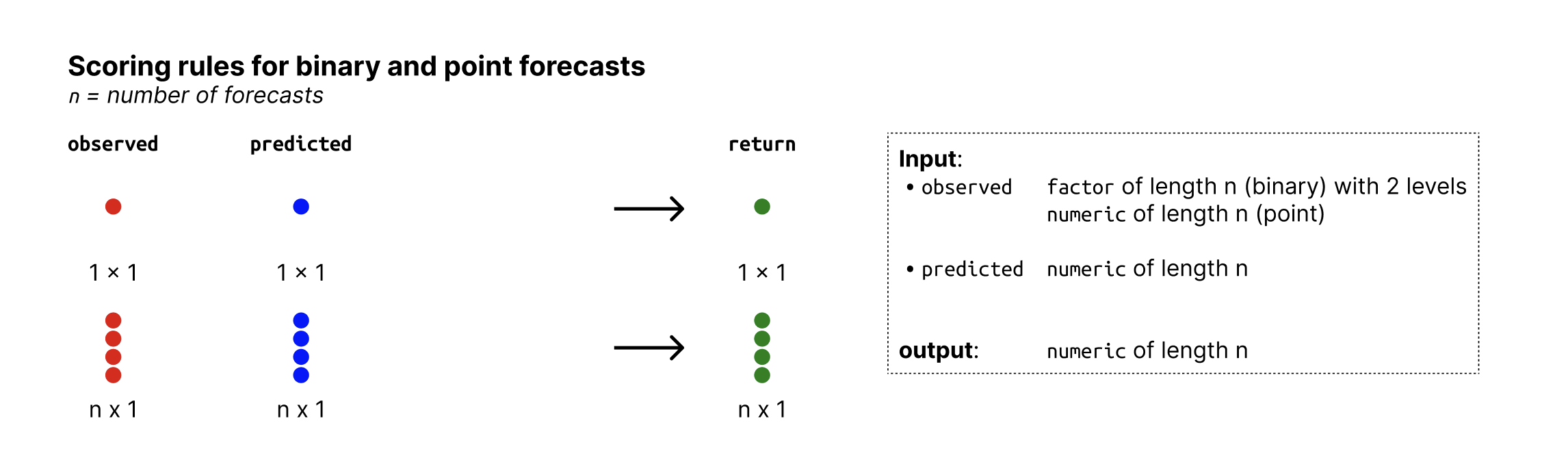

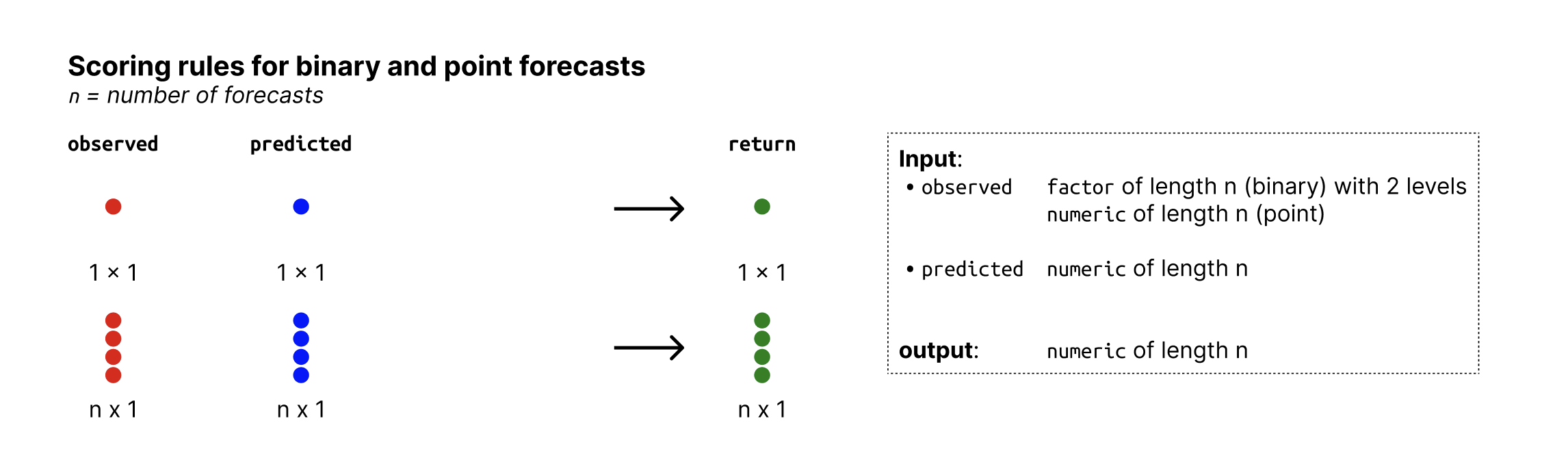

Assert that inputs are correct for point forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring point forecasts.

Usage

assert_input_point(observed, predicted)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be a numeric vector with the predicted values of size n. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Assert that inputs are correct for quantile-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring quantile-based forecasts.

Usage

assert_input_quantile(

observed,

predicted,

quantile_level,

unique_quantile_levels = TRUE

)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be nxN matrix of predictive

quantiles, n (number of rows) being the number of data points and N

(number of columns) the number of quantiles per forecast.

If |

quantile_level |

Input to be checked. Should be a vector of size N that denotes the quantile levels corresponding to the columns of the prediction matrix. |

unique_quantile_levels |

Whether the quantile levels are required to be

unique ( |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

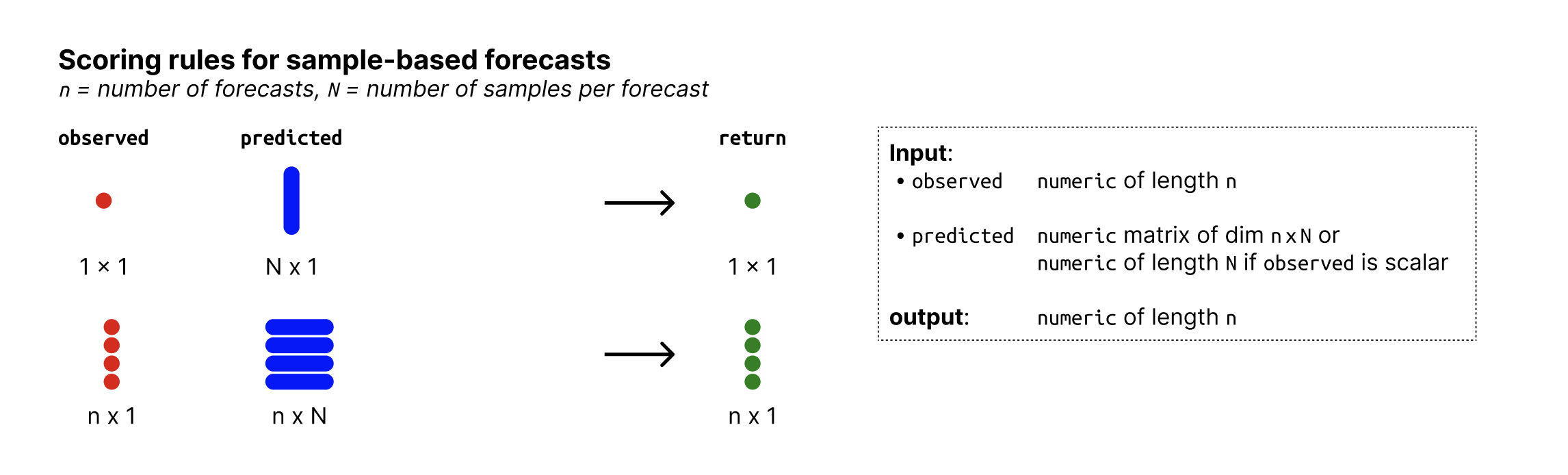

Assert that inputs are correct for sample-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring sample-based forecasts.

Usage

assert_input_sample(observed, predicted)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be a numeric nxN matrix of

predictive samples, n (number of rows) being the number of data points and

N (number of columns) the number of samples per forecast.

If |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Validate an object of class scores

Description

This function validates an object of class scores, checking

that it has the correct class and that it has a metrics attribute.

Usage

assert_scores(scores)

Arguments

scores |

A data.table or similar with scores as produced by |

Value

Returns NULL invisibly

Determines bias of quantile forecasts

Description

Determines bias from quantile forecasts. For an increasing number of quantiles this measure converges against the sample based bias version for integer and continuous forecasts.

Usage

bias_quantile(observed, predicted, quantile_level, na.rm = TRUE)

Arguments

observed |

Numeric vector of size n with the observed values. |

predicted |

Numeric nxN matrix of predictive

quantiles, n (number of rows) being the number of forecasts (corresponding

to the number of observed values) and N

(number of columns) the number of quantiles per forecast.

If |

quantile_level |

Vector of of size N with the quantile levels for which predictions were made. Note that if this does not contain the median (0.5) then the median is imputed as being the mean of the two innermost quantiles. |

na.rm |

Logical. Should missing values be removed? |

Details

For quantile forecasts, bias is measured as

B_t = (1 - 2 \cdot \max \{i | q_{t,i} \in Q_t \land q_{t,i} \leq x_t\})

\mathbf{1}( x_t \leq q_{t, 0.5}) \\

+ (1 - 2 \cdot \min \{i | q_{t,i} \in Q_t \land q_{t,i} \geq x_t\})

1( x_t \geq q_{t, 0.5}),

where Q_t is the set of quantiles that form the predictive

distribution at time t and x_t is the observed value. For

consistency, we define Q_t such that it always includes the element

q_{t, 0} = - \infty and q_{t,1} = \infty.

1() is the indicator function that is 1 if the

condition is satisfied and 0 otherwise.

In clearer terms, bias B_t is:

-

1 - 2 \cdotthe maximum percentile rank for which the corresponding quantile is still smaller than or equal to the observed value, if the observed value is smaller than the median of the predictive distribution. -

1 - 2 \cdotthe minimum percentile rank for which the corresponding quantile is still larger than or equal to the observed value if the observed value is larger than the median of the predictive distribution.. -

0if the observed value is exactly the median (both terms cancel out)

Bias can assume values between -1 and 1 and is 0 ideally (i.e. unbiased).

Note that if the given quantiles do not contain the median, the median is imputed as a linear interpolation of the two innermost quantiles. If the median is not available and cannot be imputed, an error will be thrown. Note that in order to compute bias, quantiles must be non-decreasing with increasing quantile levels.

For a large enough number of quantiles, the

percentile rank will equal the proportion of predictive samples below the

observed value, and the bias metric coincides with the one for

continuous forecasts (see bias_sample()).

Value

scalar with the quantile bias for a single quantile prediction

Input format

Overview of required input format for quantile-based forecasts

Examples

predicted <- matrix(c(1.5:23.5, 3.3:25.3), nrow = 2, byrow = TRUE)

quantile_level <- c(0.01, 0.025, seq(0.05, 0.95, 0.05), 0.975, 0.99)

observed <- c(15, 12.4)

bias_quantile(observed, predicted, quantile_level)

Compute bias for a single vector of quantile predictions

Description

Internal function to compute bias for a single observed value, a vector of predicted values and a vector of quantiles.

Usage

bias_quantile_single_vector(observed, predicted, quantile_level, na.rm)

Arguments

observed |

Scalar with the observed value. |

predicted |

Vector of length N (corresponding to the number of quantiles) that holds predictions. |

quantile_level |

Vector of of size N with the quantile levels for which predictions were made. Note that if this does not contain the median (0.5) then the median is imputed as being the mean of the two innermost quantiles. |

na.rm |

Logical. Should missing values be removed? |

Value

scalar with the quantile bias for a single quantile prediction

Determine bias of forecasts

Description

Determines bias from predictive Monte-Carlo samples. The function automatically recognises whether forecasts are continuous or integer valued and adapts the Bias function accordingly.

Usage

bias_sample(observed, predicted)

Arguments

observed |

A vector with observed values of size n |

predicted |

nxN matrix of predictive samples, n (number of rows) being

the number of data points and N (number of columns) the number of Monte

Carlo samples. Alternatively, |

Details

For continuous forecasts, Bias is measured as

B_t (P_t, x_t) = 1 - 2 * (P_t (x_t))

where P_t is the empirical cumulative distribution function of the

prediction for the observed value x_t. Computationally, P_t (x_t) is

just calculated as the fraction of predictive samples for x_t

that are smaller than x_t.

For integer valued forecasts, Bias is measured as

B_t (P_t, x_t) = 1 - (P_t (x_t) + P_t (x_t + 1))

to adjust for the integer nature of the forecasts.

In both cases, Bias can assume values between -1 and 1 and is 0 ideally.

Value

Numeric vector of length n with the biases of the predictive samples with respect to the observed values.

Input format

Overview of required input format for sample-based forecasts

References

The integer valued Bias function is discussed in Assessing the performance of real-time epidemic forecasts: A case study of Ebola in the Western Area region of Sierra Leone, 2014-15 Funk S, Camacho A, Kucharski AJ, Lowe R, Eggo RM, et al. (2019) Assessing the performance of real-time epidemic forecasts: A case study of Ebola in the Western Area region of Sierra Leone, 2014-15. PLOS Computational Biology 15(2): e1006785. doi:10.1371/journal.pcbi.1006785

Examples

## integer valued forecasts

observed <- rpois(30, lambda = 1:30)

predicted <- replicate(200, rpois(n = 30, lambda = 1:30))

bias_sample(observed, predicted)

## continuous forecasts

observed <- rnorm(30, mean = 1:30)

predicted <- replicate(200, rnorm(30, mean = 1:30))

bias_sample(observed, predicted)

Check column names are present in a data.frame

Description

The functions loops over the column names and checks whether they are present. If an issue is encountered, the function immediately stops and returns a message with the first issue encountered.

Usage

check_columns_present(data, columns)

Arguments

data |

A data.frame or similar to be checked |

columns |

A character vector of column names to check |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check Inputs Have Matching Dimensions

Description

Function assesses whether input dimensions match. In the following, n is the number of observations / forecasts. Scalar values may be repeated to match the length of the other input. Allowed options are therefore:

-

observedis vector of length 1 or length n -

predictedis:a vector of of length 1 or length n

a matrix with n rows and 1 column

Usage

check_dims_ok_point(observed, predicted)

Arguments

observed |

Input to be checked. Should be a factor of length n with

exactly two levels, holding the observed values.

The highest factor level is assumed to be the reference level. This means

that |

predicted |

Input to be checked. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that there are no duplicate forecasts

Description

Runs get_duplicate_forecasts() and returns a message if an issue is

encountered

Usage

check_duplicates(data)

Arguments

data |

A data.frame (or similar) with predicted and observed values. See the details section of for additional information on the required input format. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that inputs are correct for binary forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring binary forecasts.

Usage

check_input_binary(observed, predicted)

Arguments

observed |

Input to be checked. Should be a factor of length n with

exactly two levels, holding the observed values.

The highest factor level is assumed to be the reference level. This means

that |

predicted |

Input to be checked. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that inputs are correct for interval-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring interval-based forecasts.

Usage

check_input_interval(observed, lower, upper, interval_range)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

lower |

Input to be checked. Should be a numeric vector of size n that holds the predicted value for the lower bounds of the prediction intervals. |

upper |

Input to be checked. Should be a numeric vector of size n that holds the predicted value for the upper bounds of the prediction intervals. |

interval_range |

Input to be checked. Should be a vector of size n that denotes the interval range in percent. E.g. a value of 50 denotes a (25%, 75%) prediction interval. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that inputs are correct for point forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring point forecasts.

Usage

check_input_point(observed, predicted)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be a numeric vector with the predicted values of size n. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that inputs are correct for quantile-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring quantile-based forecasts.

Usage

check_input_quantile(observed, predicted, quantile_level)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be nxN matrix of predictive

quantiles, n (number of rows) being the number of data points and N

(number of columns) the number of quantiles per forecast.

If |

quantile_level |

Input to be checked. Should be a vector of size N that denotes the quantile levels corresponding to the columns of the prediction matrix. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that inputs are correct for sample-based forecast

Description

Function assesses whether the inputs correspond to the requirements for scoring sample-based forecasts.

Usage

check_input_sample(observed, predicted)

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

predicted |

Input to be checked. Should be a numeric nxN matrix of

predictive samples, n (number of rows) being the number of data points and

N (number of columns) the number of samples per forecast.

If |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check that all forecasts have the same number of rows

Description

Helper function that checks the number of rows (corresponding e.g to quantiles or samples) per forecast. If the number of quantiles or samples is the same for all forecasts, it returns TRUE and a string with an error message otherwise.

Usage

check_number_per_forecast(data, forecast_unit)

Arguments

data |

A data.frame or similar to be checked |

forecast_unit |

Character vector denoting the unit of a single forecast. |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Check whether an input is an atomic vector of mode 'numeric'

Description

Helper function to check whether an input is a numeric vector.

Usage

check_numeric_vector(x, ...)

Arguments

x |

input to check |

... |

Arguments passed on to

|

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Helper function to convert assert statements into checks

Description

Tries to execute an expression. Internally, this is used to

see whether assertions fail when checking inputs (i.e. to convert an

assert_*() statement into a check). If the expression fails, the error

message is returned. If the expression succeeds, TRUE is returned.

Usage

check_try(expr)

Arguments

expr |

an expression to be evaluated |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Clean forecast object

Description

The function makes it possible to silently validate an object. In addition, it can return a copy of the data and remove rows with missing values.

Usage

clean_forecast(forecast, copy = FALSE, na.omit = FALSE)

Arguments

forecast |

A forecast object (a validated data.table with predicted and observed values). |

copy |

Logical, default is |

na.omit |

Logical, default is |

Compare a subset of common forecasts

Description

This function compares two comparators based on the subset of forecasts for which

both comparators have made a prediction. It gets called

from pairwise_comparison_one_group(), which handles the

comparison of multiple comparators on a single set of forecasts (there are no

subsets of forecasts to be distinguished). pairwise_comparison_one_group()

in turn gets called from from get_pairwise_comparisons() which can handle

pairwise comparisons for a set of forecasts with multiple subsets, e.g.

pairwise comparisons for one set of forecasts, but done separately for two

different forecast targets.

Usage

compare_forecasts(

scores,

compare = "model",

name_comparator1,

name_comparator2,

metric,

one_sided = FALSE,

test_type = c("non_parametric", "permutation", NULL),

n_permutations = 999

)

Arguments

scores |

An object of class |

compare |

Character vector with a single colum name that defines the elements for the pairwise comparison. For example, if this is set to "model" (the default), then elements of the "model" column will be compared. |

name_comparator1 |

Character, name of the first comparator |

name_comparator2 |

Character, name of the comparator to compare against |

metric |

A string with the name of the metric for which a relative skill shall be computed. By default this is either "crps", "wis" or "brier_score" if any of these are available. |

one_sided |

Boolean, default is |

test_type |

Character, either "non_parametric" (the default), "permutation", or NULL. This determines which kind of test shall be conducted to determine p-values. If NULL, no test will be conducted and p-values will be NA. |

n_permutations |

Numeric, the number of permutations for a permutation test. Default is 999. |

Value

A list with mean score ratios and p-values for the comparison between two comparators

Author(s)

Johannes Bracher, johannes.bracher@kit.edu

Nikos Bosse nikosbosse@gmail.com

(Continuous) ranked probability score

Description

Wrapper around the crps_sample()

function from the

scoringRules package. Can be used for continuous as well as integer

valued forecasts

The Continuous ranked probability score (CRPS) can be interpreted as the sum of three components: overprediction, underprediction and dispersion. "Dispersion" is defined as the CRPS of the median forecast $m$. If an observation $y$ is greater than $m$ then overprediction is defined as the CRPS of the forecast for $y$ minus the dispersion component, and underprediction is zero. If, on the other hand, $y<m$ then underprediction is defined as the CRPS of the forecast for $y$ minus the dispersion component, and overprediction is zero.

The overprediction, underprediction and dispersion components correspond to

those of the wis().

Usage

crps_sample(observed, predicted, separate_results = FALSE, ...)

dispersion_sample(observed, predicted, ...)

overprediction_sample(observed, predicted, ...)

underprediction_sample(observed, predicted, ...)

Arguments

observed |

A vector with observed values of size n |

predicted |

nxN matrix of predictive samples, n (number of rows) being

the number of data points and N (number of columns) the number of Monte

Carlo samples. Alternatively, |

separate_results |

Logical. If |

... |

Additional arguments passed on to |

Value

Vector with scores.

dispersion_sample(): a numeric vector with dispersion values (one per

observation).

overprediction_sample(): a numeric vector with overprediction values

(one per observation).

underprediction_sample(): a numeric vector with underprediction values (one per

observation).

Input format

Overview of required input format for sample-based forecasts

References

Alexander Jordan, Fabian Krüger, Sebastian Lerch, Evaluating Probabilistic Forecasts with scoringRules, https://www.jstatsoft.org/article/view/v090i12

Examples

observed <- rpois(30, lambda = 1:30)

predicted <- replicate(200, rpois(n = 30, lambda = 1:30))

crps_sample(observed, predicted)

Documentation template for assert functions

Description

Documentation template for assert functions

Arguments

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

Value

Returns NULL invisibly if the assertion was successful and throws an error otherwise.

Documentation template for check functions

Description

Documentation template for check functions

Arguments

data |

A data.frame or similar to be checked |

observed |

Input to be checked. Should be a numeric vector with the observed values of size n. |

columns |

A character vector of column names to check |

Value

Returns TRUE if the check was successful and a string with an error message otherwise.

Documentation template for test functions

Description

Documentation template for test functions

Value

Returns TRUE if the check was successful and FALSE otherwise

Dawid-Sebastiani score

Description

Wrapper around the dss_sample()

function from the

scoringRules package.

Usage

dss_sample(observed, predicted, ...)

Arguments

observed |

A vector with observed values of size n |

predicted |

nxN matrix of predictive samples, n (number of rows) being

the number of data points and N (number of columns) the number of Monte

Carlo samples. Alternatively, |

... |

Additional arguments passed to dss_sample() from the scoringRules package. |

Value

Vector with scores.

Input format

Overview of required input format for sample-based forecasts

References

Alexander Jordan, Fabian Krüger, Sebastian Lerch, Evaluating Probabilistic Forecasts with scoringRules, https://www.jstatsoft.org/article/view/v090i12

Examples

observed <- rpois(30, lambda = 1:30)

predicted <- replicate(200, rpois(n = 30, lambda = 1:30))

dss_sample(observed, predicted)

Ensure that an object is a data.table

Description

This function ensures that an object is a data table.

If the object is not a data table, it is converted to one. If the object

is a data table, a copy of the object is returned.

Usage

ensure_data.table(data)

Arguments

data |

An object to ensure is a data table. |

Value

A data.table/a copy of an existing data.table.

Binary forecast example data

Description

A data set with binary predictions for COVID-19 cases and deaths constructed from data submitted to the European Forecast Hub.

Usage

example_binary

Format

An object of class forecast_binary (see as_forecast_binary())

with the following columns:

- location

the country for which a prediction was made

- location_name

name of the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

A factor with observed values

- forecast_date

the date on which a prediction was made

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

- predicted

predicted value

Details

Predictions in the data set were constructed based on the continuous example data by looking at the number of samples below the mean prediction. The outcome was constructed as whether or not the actually observed value was below or above that mean prediction. This should not be understood as sound statistical practice, but rather as a practical way to create an example data set.

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Nominal example data

Description

A data set with predictions for COVID-19 cases and deaths submitted to the European Forecast Hub.

Usage

example_nominal

Format

An object of class forecast_nominal

(see as_forecast_nominal()) with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

Numeric: observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- predicted_label

outcome that a probabilty corresponds to

- predicted

predicted value

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Ordinal example data

Description

A data set with predictions for COVID-19 cases and deaths submitted to the European Forecast Hub.

Usage

example_ordinal

Format

An object of class forecast_ordinal

(see as_forecast_ordinal()) with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

Numeric: observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- predicted_label

outcome that a probabilty corresponds to

- predicted

predicted value

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Point forecast example data

Description

A data set with predictions for COVID-19 cases and deaths submitted to the European Forecast Hub. This data set is like the quantile example data, only that the median has been replaced by a point forecast.

Usage

example_point

Format

An object of class forecast_point (see as_forecast_point())

with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- predicted

predicted value

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Quantile example data

Description

A data set with predictions for COVID-19 cases and deaths submitted to the European Forecast Hub.

Usage

example_quantile

Format

An object of class forecast_quantile

(see as_forecast_quantile()) with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

Numeric: observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- quantile_level

quantile level of the corresponding prediction

- predicted

predicted value

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Continuous forecast example data

Description

A data set with continuous predictions for COVID-19 cases and deaths constructed from data submitted to the European Forecast Hub.

Usage

example_sample_continuous

Format

An object of class forecast_sample (see as_forecast_sample())

with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

- predicted

predicted value

- sample_id

id for the corresponding sample

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Discrete forecast example data

Description

A data set with integer predictions for COVID-19 cases and deaths constructed from data submitted to the European Forecast Hub.

Usage

example_sample_discrete

Format

An object of class forecast_sample (see as_forecast_sample())

with the following columns:

- location

the country for which a prediction was made

- target_end_date

the date for which a prediction was made

- target_type

the target to be predicted (cases or deaths)

- observed

observed values

- location_name

name of the country for which a prediction was made

- forecast_date

the date on which a prediction was made

- model

name of the model that generated the forecasts

- horizon

forecast horizon in weeks

- predicted

predicted value

- sample_id

id for the corresponding sample

Details

The data was created using the script create-example-data.R in the inst/ folder (or the top level folder in a compiled package).

Source

Documentation template for forecast types

Description

Documentation template for forecast types

Forecast unit

In order to score forecasts, scoringutils needs to know which of the rows

of the data belong together and jointly form a single forecasts. This is

easy e.g. for point forecast, where there is one row per forecast. For

quantile or sample-based forecasts, however, there are multiple rows that

belong to a single forecast.

The forecast unit or unit of a single forecast is then described by the

combination of columns that uniquely identify a single forecast.

For example, we could have forecasts made by different models in various

locations at different time points, each for several weeks into the future.

The forecast unit could then be described as

forecast_unit = c("model", "location", "forecast_date", "forecast_horizon").

scoringutils automatically tries to determine the unit of a single

forecast. It uses all existing columns for this, which means that no columns

must be present that are unrelated to the forecast unit. As a very simplistic

example, if you had an additional row, "even", that is one if the row number

is even and zero otherwise, then this would mess up scoring as scoringutils

then thinks that this column was relevant in defining the forecast unit.

In order to avoid issues, we recommend setting the forecast unit explicitly,

using the forecast_unit argument. This will simply drop unneeded columns,

while making sure that all necessary, 'protected columns' like "predicted"

or "observed" are retained.

Calculate geometric mean

Description

Calculate geometric mean

Usage

geometric_mean(x)

Arguments

x |

Numeric vector of values for which to calculate the geometric mean. |

Details

Used in get_pairwise_comparisons().

Value

The geometric mean of the values in x. NA values are ignored.

Calculate correlation between metrics

Description

Calculate the correlation between different metrics for a data.frame of

scores as produced by score().

Usage

get_correlations(scores, metrics = get_metrics.scores(scores), ...)

Arguments

scores |

An object of class |

metrics |

A character vector with the metrics to show. If set to

|

... |

Additional arguments to pass down to |

Value