| Type: | Package |

| Title: | Interface to 'dgpsi' for Deep and Linked Gaussian Process Emulations |

| Version: | 2.6.0 |

| Maintainer: | Deyu Ming <deyu.ming.16@ucl.ac.uk> |

| Description: | Interface to the 'python' package 'dgpsi' for Gaussian process, deep Gaussian process, and linked deep Gaussian process emulations of computer models and networks using stochastic imputation (SI). The implementations follow Ming & Guillas (2021) <doi:10.1137/20M1323771> and Ming, Williamson, & Guillas (2023) <doi:10.1080/00401706.2022.2124311> and Ming & Williamson (2023) <doi:10.48550/arXiv.2306.01212>. To get started with the package, see https://mingdeyu.github.io/dgpsi-R/. |

| License: | MIT + file LICENSE |

| URL: | https://github.com/mingdeyu/dgpsi-R, https://mingdeyu.github.io/dgpsi-R/ |

| BugReports: | https://github.com/mingdeyu/dgpsi-R/issues |

| Encoding: | UTF-8 |

| Depends: | R (≥ 4.0) |

| Imports: | reticulate (≥ 1.26), benchmarkme (≥ 1.0.8), utils, ggplot2, ggforce, reshape2, patchwork, lhs, methods, stats, clhs, dplyr, uuid, tidyr, rlang, lifecycle, magrittr, visNetwork, parallel, kableExtra |

| Suggests: | knitr, rmarkdown, MASS, R.utils, spelling |

| VignetteBuilder: | knitr |

| RoxygenNote: | 7.3.3 |

| Language: | en-US |

| NeedsCompilation: | no |

| Packaged: | 2025-10-15 21:05:44 UTC; deyu |

| Author: | Deyu Ming [aut, cre, cph], Daniel Williamson [aut] |

| Repository: | CRAN |

| Date/Publication: | 2025-10-15 21:20:02 UTC |

dgpsi: Interface to 'dgpsi' for Deep and Linked Gaussian Process Emulations

Description

Interface to the 'python' package 'dgpsi' for Gaussian process, deep Gaussian process, and linked deep Gaussian process emulations of computer models and networks using stochastic imputation (SI). The implementations follow Ming & Guillas (2021) doi:10.1137/20M1323771 and Ming, Williamson, & Guillas (2023) doi:10.1080/00401706.2022.2124311 and Ming & Williamson (2023) doi:10.48550/arXiv.2306.01212. To get started with the package, see https://mingdeyu.github.io/dgpsi-R/.

Author(s)

Maintainer: Deyu Ming deyu.ming.16@ucl.ac.uk [copyright holder]

Authors:

Daniel Williamson d.williamson@exeter.ac.uk

See Also

Useful links:

Report bugs at https://github.com/mingdeyu/dgpsi-R/issues

Locate the next design point(s) for a (D)GP emulator or a bundle of (D)GP emulators using Active Learning MacKay (ALM)

Description

This function searches from a candidate set to locate the next design point(s) to be added to a (D)GP emulator or a bundle of (D)GP emulators using the Active Learning MacKay (ALM) criterion (see the reference below).

Usage

alm(object, ...)

## S3 method for class 'gp'

alm(

object,

x_cand = NULL,

n_start = 20,

batch_size = 1,

M = 50,

workers = 1,

limits = NULL,

int = FALSE,

...

)

## S3 method for class 'dgp'

alm(

object,

x_cand = NULL,

n_start = 20,

batch_size = 1,

M = 50,

workers = 1,

limits = NULL,

int = FALSE,

aggregate = NULL,

...

)

## S3 method for class 'bundle'

alm(

object,

x_cand = NULL,

n_start = 20,

batch_size = 1,

M = 50,

workers = 1,

limits = NULL,

int = FALSE,

aggregate = NULL,

...

)

Arguments

object |

can be one of the following:

|

... |

any arguments (with names different from those of arguments used in |

x_cand |

a matrix (with each row being a design point and column being an input dimension) that gives a candidate set

from which the next design point(s) are determined. If |

n_start |

an integer that gives the number of initial design points to be used to determine next design point(s). This argument

is only used when |

batch_size |

an integer that gives the number of design points to be chosen. Defaults to |

M |

the size of the conditioning set for the Vecchia approximation in the criterion calculation. This argument is only used if the emulator |

workers |

the number of processes to be used for design point selection. If set to |

limits |

a two-column matrix that gives the ranges of each input dimension, or a vector of length two if there is only one input dimension.

If a vector is provided, it will be converted to a two-column row matrix. The rows of the matrix correspond to input dimensions, and its

first and second columns correspond to the minimum and maximum values of the input dimensions. This

argument is only used when |

int |

a bool or a vector of bools that indicates if an input dimension is an integer type. If a single bool is given, it will be applied to

all input dimensions. If a vector is provided, it should have a length equal to the input dimensions and will be applied to individual

input dimensions. This argument is only used when |

aggregate |

an R function that aggregates scores of the ALM across different output dimensions (if

Set to |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

If

x_candis notNULL:When

objectis an instance of thegpclass, a vector of lengthbatch_sizeis returned, containing the positions (row numbers) of the next design points fromx_cand.When

objectis an instance of thedgpclass, a vector of lengthbatch_size * Dis returned, containing the positions (row numbers) of the next design points fromx_candto be added to the DGP emulator.-

Dis the number of output dimensions of the DGP emulator if no likelihood layer is included. For a DGP emulator with a

HeteroorNegBinlikelihood layer,D = 2.For a DGP emulator with a

Categoricallikelihood layer,D = 1for binary output orD = Kfor multi-class output withKclasses.

-

When

objectis an instance of thebundleclass, a matrix is returned withbatch_sizerows and a column for each emulator in the bundle, containing the positions (row numbers) of the next design points fromx_candfor individual emulators.

If

x_candisNULL:When

objectis an instance of thegpclass, a matrix withbatch_sizerows is returned, giving the next design points to be evaluated.When

objectis an instance of thedgpclass, a matrix withbatch_size * Drows is returned, where:-

Dis the number of output dimensions of the DGP emulator if no likelihood layer is included. For a DGP emulator with a

HeteroorNegBinlikelihood layer,D = 2.For a DGP emulator with a

Categoricallikelihood layer,D = 1for binary output orD = Kfor multi-class output withKclasses.

-

When

objectis an instance of thebundleclass, a list is returned with a length equal to the number of emulators in the bundle. Each element of the list is a matrix withbatch_sizerows, where each row represents a design point to be added to the corresponding emulator.

Note

The first column of the matrix supplied to the first argument of aggregate must correspond to the first output dimension of the DGP emulator

if object is an instance of the dgp class, and so on for subsequent columns and dimensions. If object is an instance of the bundle class,

the first column must correspond to the first emulator in the bundle, and so on for subsequent columns and emulators.

References

MacKay, D. J. (1992). Information-based objective functions for active data selection. Neural Computation, 4(4), 590-604.

Examples

## Not run:

# load packages and the Python env

library(lhs)

library(dgpsi)

# construct a 1D non-stationary function

f <- function(x) {

sin(30*((2*x-1)/2-0.4)^5)*cos(20*((2*x-1)/2-0.4))

}

# generate the initial design

X <- maximinLHS(10,1)

Y <- f(X)

# training a 2-layered DGP emulator with the global connection off

m <- dgp(X, Y, connect = F)

# specify the input range

lim <- c(0,1)

# locate the next design point using ALM

X_new <- alm(m, limits = lim)

# obtain the corresponding output at the located design point

Y_new <- f(X_new)

# combine the new input-output pair to the existing data

X <- rbind(X, X_new)

Y <- rbind(Y, Y_new)

# update the DGP emulator with the new input and output data and refit

m <- update(m, X, Y, refit = TRUE)

# plot the LOO validation

plot(m)

## End(Not run)

Continue training a DGP emulator

Description

This function implements additional training iterations for a DGP emulator.

Usage

continue(

object,

N = NULL,

cores = 1,

ess_burn = 10,

verb = TRUE,

burnin = NULL,

B = NULL

)

Arguments

object |

an instance of the |

N |

additional number of iterations to train the DGP emulator. If set to |

cores |

the number of processes to be used to optimize GP components (in the same layer) at each M-step of the training. If set to |

ess_burn |

number of burnin steps for ESS-within-Gibbs

at each I-step of the training. Defaults to |

verb |

a bool indicating if a progress bar will be printed during training. Defaults to |

burnin |

the number of training iterations to be discarded for

point estimates calculation. Must be smaller than the overall training iterations

so-far implemented. If this is not specified, only the last 25% of iterations

are used. This overrides the value of |

B |

the number of imputations to produce predictions. Increase the value to account for

more imputation uncertainty. This overrides the value of |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object.

Note

One can also use this function to fit an untrained DGP emulator constructed by

dgp()withtraining = FALSE.The following slots:

-

looandooscreated byvalidate(); and -

resultscreated bypredict()inobjectwill be removed and not contained in the returned object.

-

Examples

## Not run:

# See dgp() for an example.

## End(Not run)

Restore the serialized emulator

Description

This function restores the serialized emulator created by serialize().

Usage

deserialize(object)

Arguments

object |

the serialized object of an emulator. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

The S3 class of a GP emulator, a DGP emulator, a linked (D)GP emulator, or a bundle of (D)GP emulators.

Note

See the Note section in serialize().

Examples

## Not run:

# See serialize() for an example.

## End(Not run)

Sequential design of a (D)GP emulator or a bundle of (D)GP emulators

Description

This function implements sequential design and active learning for a (D)GP emulator or a bundle of (D)GP emulators, supporting an array of popular methods as well as user-specified approaches. It can also be used as a wrapper for Bayesian optimization methods.

Usage

design(

object,

N,

x_cand,

y_cand,

n_sample,

limits,

f,

reps,

freq,

x_test,

y_test,

reset,

target,

method,

batch_size,

eval,

verb,

autosave,

new_wave,

M_val,

cores,

...

)

## S3 method for class 'gp'

design(

object,

N,

x_cand = NULL,

y_cand = NULL,

n_sample = 200,

limits = NULL,

f = NULL,

reps = 1,

freq = c(1, 1),

x_test = NULL,

y_test = NULL,

reset = FALSE,

target = NULL,

method = vigf,

batch_size = 1,

eval = NULL,

verb = TRUE,

autosave = list(),

new_wave = TRUE,

M_val = 50,

cores = 1,

...

)

## S3 method for class 'dgp'

design(

object,

N,

x_cand = NULL,

y_cand = NULL,

n_sample = 200,

limits = NULL,

f = NULL,

reps = 1,

freq = c(1, 1),

x_test = NULL,

y_test = NULL,

reset = FALSE,

target = NULL,

method = vigf,

batch_size = 1,

eval = NULL,

verb = TRUE,

autosave = list(),

new_wave = TRUE,

M_val = 50,

cores = 1,

train_N = NULL,

refit_cores = 1,

pruning = TRUE,

control = list(),

...

)

## S3 method for class 'bundle'

design(

object,

N,

x_cand = NULL,

y_cand = NULL,

n_sample = 200,

limits = NULL,

f = NULL,

reps = 1,

freq = c(1, 1),

x_test = NULL,

y_test = NULL,

reset = FALSE,

target = NULL,

method = vigf,

batch_size = 1,

eval = NULL,

verb = TRUE,

autosave = list(),

new_wave = TRUE,

M_val = 50,

cores = 1,

train_N = NULL,

refit_cores = 1,

...

)

Arguments

object |

can be one of the following:

|

N |

the number of iterations for the sequential design. |

x_cand |

a matrix (with each row being a design point and column being an input dimension) that gives a candidate set

from which the next design points are determined. Defaults to |

y_cand |

a matrix (with each row being a simulator evaluation and column being an output dimension) that gives the realizations

from the simulator at input positions in |

n_sample |

an integer that gives the size of a sub-set to be sampled from the candidate set Defaults to |

limits |

a two-column matrix that gives the ranges of each input dimension, or a vector of length two if there is only one

input dimension. If a vector is provided, it will be converted to a two-column row matrix. The rows of the matrix correspond to input

dimensions, and its first and second columns correspond to the minimum and maximum values of the input dimensions. Set

|

f |

an R function representing the simulator.

See the Note section below for additional details. This argument is required and must be supplied when |

reps |

an integer that gives the number of repetitions of the located design points to be created and used for evaluations of |

freq |

a vector of two integers with the first element indicating the number of iterations taken between re-estimating

the emulator hyperparameters, and the second element defining the number of iterations to take between re-calculation of evaluating metrics

on the validation set (see |

x_test |

a matrix (with each row being an input testing data point and each column being an input dimension) that gives the testing

input data to evaluate the emulator after each |

y_test |

the testing output data corresponding to

Set to |

reset |

A bool or a vector of bools indicating whether to reset the hyperparameters of the emulator(s) to their initial values (as set during initial construction) before re-fitting.

The re-fitting occurs based on the frequency specified by

Defaults to |

target |

a number or vector specifying the target evaluation metric value(s) at which the sequential design should terminate.

Defaults to |

method |

an R function that determines the next design points to be evaluated by

See |

batch_size |

an integer specifying the number of design points to select in a single iteration. Defaults to |

eval |

an R function that computes a customized metric for evaluating emulator performance. The function must adhere to the following rules:

If no custom function is provided, a built-in evaluation metric (RMSE or log-loss, in the case of DGP emulators with categorical likelihoods) will be used.

Defaults to |

verb |

a bool indicating if trace information will be printed during the sequential design.

Defaults to |

autosave |

a list that contains configuration settings for the automatic saving of the emulator:

|

new_wave |

a bool indicating whether the current call to |

M_val |

an integer that gives the size of the conditioning set for the Vecchia approximation in emulator validations. This argument is only used if the emulator |

cores |

an integer that gives the number of processes to be used for emulator validation. If set to |

... |

Any arguments with names that differ from those used in |

train_N |

the number of training iterations to be used for re-fitting the DGP emulator at each step of the sequential design:

Defaults to |

refit_cores |

the number of processes to be used to re-fit GP components (in the same layer of a DGP emulator)

at each M-step during the re-fitting. If set to |

pruning |

a bool indicating if dynamic pruning of DGP structures will be implemented during the sequential design after the total number of

design points exceeds |

control |

a list that can supply any of the following components to control the dynamic pruning of the DGP emulator:

The argument is only used when |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object is returned with a slot called design that contains:

-

S slots, named

wave1, wave2,..., waveS, that contain information of S waves of sequential design that have been applied to the emulator. Each slot contains the following elements:-

N, an integer that gives the numbers of iterations implemented in the corresponding wave; -

rmse, a matrix providing the evaluation metric values for emulators constructed during the corresponding wave, wheneval = NULL. Each row of the matrix represents an iteration.for an

objectof classgp, the matrix contains a single column of RMSE values.for an

objectof classdgpwithout a categorical likelihood, each row contains mean/median squared errors corresponding to different output dimensions.for an

objectof classdgpwith a categorical likelihood, the matrix contains a single column of log-loss values.for an

objectof classbundle, each row contains either mean/median squared errors or log-loss values for the emulators in the bundle.

-

metric: a matrix providing the values of custom evaluation metrics, as computed by the user-suppliedevalfunction, for emulators constructed during the corresponding wave. -

freq, an integer that gives the frequency that the emulator validations are implemented during the corresponding wave. -

enrichment, a vector of sizeNthat gives the number of new design points added after each step of the sequential design (ifobjectis an instance of thegpordgpclass), or a matrix that gives the number of new design points added to emulators in a bundle after each step of the sequential design (ifobjectis an instance of thebundleclass).

If

targetis notNULL, the following additional elements are also included:-

target: the target evaluating metric computed by theevalor built-in function to stop the sequential design. -

reached: indicates whether thetargetwas reached at the end of the sequential design:a bool if

objectis an instance of thegpordgpclass.a vector of bools if

objectis an instance of thebundleclass, with its length determined as follows:equal to the number of emulators in the bundle when

eval = NULL.equal to the length of the output from

evalwhen a customevalfunction is provided.

-

a slot called

typethat gives the type of validation:either LOO ('loo') or OOS ('oos') if

eval = NULL. Seevalidate()for more information about LOO and OOS.'customized' if a customized R function is provided to

eval.

two slots called

x_testandy_testthat contain the data points for the OOS validation if thetypeslot is 'oos'.If

y_cand = NULLandx_candis supplied, and there areNAs returned from the suppliedfduring the sequential design, a slot calledexclusionis included that records the located design positions that producedNAs viaf. The sequential design will use this information to avoid re-visiting the same locations in later runs ofdesign().

See Note section below for further information.

Note

Validation of an emulator is forced after the final step of a sequential design even if

Nis not a multiple of the second element infreq.Any

loooroosslot that already exists inobjectwill be cleaned, and a new slot calledlooorooswill be created in the returned object depending on whetherx_testandy_testare provided. The new slot gives the validation information of the emulator constructed in the final step of the sequential design. Seevalidate()for more information about the slotslooandoos.If

objecthas previously been used bydesign()for sequential design, the information of the current wave of the sequential design will replace those of old waves and be contained in the returned object, unlessthe validation type (LOO or OOS depending on whether

x_testandy_testare supplied or not) of the current wave of the sequential design is the same as the validation types (shown in thetypeof thedesignslot ofobject) in previous waves, and if the validation type is OOS,x_testandy_testin the current wave must also be identical to those in the previous waves;both the current and previous waves of the sequential design supply customized evaluation functions to

eval. Users need to ensure the customized evaluation functions are consistent among different waves. Otherwise, the trace plot of RMSEs produced bydraw()will show values of different evaluation metrics in different waves.

For the above two cases, the information of the current wave of the sequential design will be added to the

designslot of the returned object under the namewaveS.If

objectis an instance of thegpclass andeval = NULL, the matrix in thermseslot is single-columned. Ifobjectis an instance of thedgporbundleclass andeval = NULL, the matrix in thermseslot can have multiple columns that correspond to different output dimensions or different emulators in the bundle.If

objectis an instance of thegpclass andeval = NULL,targetneeds to be a single value giving the RMSE threshold. Ifobjectis an instance of thedgporbundleclass andeval = NULL,targetcan be a vector of values that gives the thresholds of evaluating metrics for different output dimensions or different emulators. If a single value is provided, it will be used as the threshold for all output dimensions (ifobjectis an instance of thedgp) or all emulators (ifobjectis an instance of thebundle). If a customized function is supplied toevalandtargetis given as a vector, the user needs to ensure that the length oftargetis equal to that of the output fromeval.When defining

f, it is important to ensure that:the column order of the first argument of

fis consistent with the training input used for the emulator;the column order of the output matrix of

fis consistent with the order of emulator output dimensions (ifobjectis an instance of thedgpclass), or the order of emulators placed inobject(ifobjectis an instance of thebundleclass).

The output matrix produced by

fmay includeNAs. This is especially beneficial as it allows the sequential design process to continue without interruption, even if errors orNAoutputs are encountered fromfat certain input locations identified by the sequential design. Users should ensure that any errors withinfare handled by appropriately returningNAs.When defining

eval, the output metric needs to be positive ifdraw()is used withlog = T. And one needs to ensure that a lower metric value indicates a better emulation performance iftargetis set.

Examples

## Not run:

# load packages and the Python env

library(lhs)

library(dgpsi)

# construct a 2D non-stationary function that takes a matrix as the input

f <- function(x) {

sin(1/((0.7*x[,1,drop=F]+0.3)*(0.7*x[,2,drop=F]+0.3)))

}

# generate the initial design

X <- maximinLHS(5,2)

Y <- f(X)

# generate the validation data

validate_x <- maximinLHS(30,2)

validate_y <- f(validate_x)

# training a 2-layered DGP emulator with the initial design

m <- dgp(X, Y)

# specify the ranges of the input dimensions

lim_1 <- c(0, 1)

lim_2 <- c(0, 1)

lim <- rbind(lim_1, lim_2)

# 1st wave of the sequential design with 10 steps

m <- design(m, N=10, limits = lim, f = f, x_test = validate_x, y_test = validate_y)

# 2nd wave of the sequential design with 10 steps

m <- design(m, N=10, limits = lim, f = f, x_test = validate_x, y_test = validate_y)

# 3rd wave of the sequential design with 10 steps

m <- design(m, N=10, limits = lim, f = f, x_test = validate_x, y_test = validate_y)

# draw the design created by the sequential design

draw(m,'design')

# inspect the trace of RMSEs during the sequential design

draw(m,'rmse')

# reduce the number of imputations for faster OOS

m_faster <- set_imp(m, 5)

# plot the OOS validation with the faster DGP emulator

plot(m_faster, x_test = validate_x, y_test = validate_y)

## End(Not run)

Deep Gaussian process emulator construction

Description

This function builds and trains a DGP emulator.

Usage

dgp(

X,

Y,

depth = 2,

node = ncol(X),

name = "sexp",

lengthscale = 1,

bounds = NULL,

prior = "ga",

share = TRUE,

nugget_est = FALSE,

nugget = NULL,

scale_est = TRUE,

scale = 1,

connect = NULL,

likelihood = NULL,

training = TRUE,

verb = TRUE,

check_rep = TRUE,

vecchia = FALSE,

M = 25,

ord = NULL,

N = ifelse(vecchia, 200, 500),

cores = 1,

blocked_gibbs = TRUE,

ess_burn = 10,

burnin = NULL,

B = 10,

id = NULL,

decouple = FALSE,

link = "logit"

)

Arguments

X |

a matrix where each row is an input training data point and each column represents an input dimension. |

Y |

a matrix containing observed training output data. The matrix has its rows being output data points and columns representing

output dimensions. When |

depth |

number of layers (including the likelihood layer) for a DGP structure. |

node |

number of GP nodes in each layer (except for the final layer or the layer feeding the likelihood node) of the DGP. Defaults to

|

name |

a character or a vector of characters that indicates the kernel functions (either

Defaults to |

lengthscale |

initial lengthscales for GP nodes in the DGP emulator. It can be a single numeric value or a vector:

Defaults to a numeric value of |

bounds |

the lower and upper bounds of lengthscales in GP nodes. It can be a vector or a matrix:

Defaults to |

prior |

prior to be used for MAP estimation of lengthscales and nuggets of all GP nodes in the DGP hierarchy:

Defaults to |

share |

a bool indicating if all input dimensions of a GP node share a common lengthscale. Defaults to |

nugget_est |

Each element of the vector is applied to the corresponding GP node in the final layer (or the layer feeding the likelihood node). The value of a bool has following effects:

Defaults to |

nugget |

the initial nugget value(s) of GP nodes (if any) in each layer:

Set |

scale_est |

a bool or a bool vector indicating whether the variances of GP nodes in the final layer (or the layer feeding the likelihood node) should be estimated. If a bool is provided, it is applied to all GP nodes in that layer. If a bool vector is provided, its length must match the number of GP nodes:

The value of a bool has following effects:

Defaults to |

scale |

the initial variance value(s) of GP nodes in the final layer (or the layer feeding the likelihood node). If it is a single numeric value, it will be applied to all GP nodes in the final layer (or the layer feeding the likelihood node). If it is a vector, its length must match the number of GP nodes:

Each numeric in the vector will be applied to the corresponding GP node. Defaults to |

connect |

a bool indicating whether to apply global input connections in the DGP structure. Setting this to |

likelihood |

the likelihood type of a DGP emulator:

Defaults to |

training |

a bool indicating if the initialized DGP emulator will be trained.

When set to |

verb |

a bool indicating if the trace information on DGP emulator construction and training will be printed during the function execution.

Defaults to |

check_rep |

a bool indicating whether to check for repetitions in the dataset, i.e., if one input

position has multiple outputs. Defaults to |

vecchia |

a bool indicating whether to use Vecchia approximation for large-scale DGP emulator construction and prediction. Defaults to |

M |

the size of the conditioning set for the Vecchia approximation in the DGP emulator training. Defaults to |

ord |

an R function that returns the ordering of the input to each GP node contained in the DGP emulator for the Vecchia approximation. The function must satisfy the following basic rules:

If |

N |

number of iterations for the training. Defaults to |

cores |

the number of processes to be used to optimize GP components (in the same layer) at each M-step of the training. If set to |

blocked_gibbs |

a bool indicating if the latent variables are imputed layer-wise using ESS-within-Blocked-Gibbs. ESS-within-Blocked-Gibbs would be faster and

more efficient than ESS-within-Gibbs that imputes latent variables node-wise because it reduces the number of components to be sampled during Gibbs steps,

especially when there is a large number of GP nodes in layers due to higher input dimensions. Default to |

ess_burn |

number of burnin steps for the ESS-within-Gibbs

at each I-step of the training. Defaults to |

burnin |

the number of training iterations to be discarded for

point estimates of model parameters. Must be smaller than the training iterations |

B |

the number of imputations used to produce predictions. Increase the value to refine the representation of imputation uncertainty.

Defaults to |

id |

an ID to be assigned to the DGP emulator. If an ID is not provided (i.e., |

decouple |

|

link |

|

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An S3 class named dgp that contains five slots:

-

id: A number or character string assigned through theidargument. -

data: a list that contains two elements:XandYwhich are the training input and output data respectively. -

specs: a list that contains-

L (i.e., the number of layers in the DGP hierarchy) sub-lists named

layer1, layer2,..., layerL. Each sub-list contains D (i.e., the number of GP/likelihood nodes in the corresponding layer) sub-lists namednode1, node2,..., nodeD. If a sub-list corresponds to a likelihood node, it contains one element calledtypethat gives the name (Hetero,Poisson,NegBin, orCategorical) of the likelihood node. If a sub-list corresponds to a GP node, it contains four elements:-

kernel: the type of the kernel function used for the GP node. -

lengthscales: a vector of lengthscales in the kernel function. -

scale: the variance value in the kernel function. -

nugget: the nugget value in the kernel function.

-

-

seed: the random seed generated to produce imputations. This information is stored for reproducibility when the DGP emulator (that was saved bywrite()with the light optionlight = TRUE) is loaded back to R byread(). -

B: the number of imputations used to generate the emulator. -

vecchia: whether the Vecchia approximation is used for the GP emulator training. -

M: the size of the conditioning set for the Vecchia approximation in the DGP emulator training.Mis generated only whenvecchia = TRUE.

-

-

constructor_obj: a 'python' object that stores the information of the constructed DGP emulator. -

container_obj: a 'python' object that stores the information for the linked emulation. -

emulator_obj: a 'python' object that stores the information for the predictions from the DGP emulator.

The returned dgp object can be used by

-

predict()for DGP predictions. -

continue()for additional DGP training iterations. -

validate()for LOO and OOS validations. -

plot()for validation plots. -

lgp()for linked (D)GP emulator constructions. -

window()for model parameter trimming. -

summary()to summarize the trained DGP emulator. -

write()to save the DGP emulator to a.pklfile. -

set_imp()to change the number of imputations. -

design()for sequential design. -

update()to update the DGP emulator with new inputs and outputs.

Note

Any R vector detected in X and Y will be treated as a column vector and automatically converted into a single-column

R matrix. Thus, if X is a single data point with multiple dimensions, it must be given as a matrix.

Examples

## Not run:

# load the package and the Python env

library(dgpsi)

# construct a step function

f <- function(x) {

if (x < 0.5) return(-1)

if (x >= 0.5) return(1)

}

# generate training data

X <- seq(0, 1, length = 10)

Y <- sapply(X, f)

# set a random seed

set_seed(999)

# training a DGP emulator

m <- dgp(X, Y)

# continue for further training iterations

m <- continue(m)

# summarizing

summary(m)

# trace plot

trace_plot(m)

# trim the traces of model parameters

m <- window(m, 800)

# LOO cross validation

m <- validate(m)

plot(m)

# prediction

test_x <- seq(0, 1, length = 200)

m <- predict(m, x = test_x)

# OOS validation

validate_x <- sample(test_x, 10)

validate_y <- sapply(validate_x, f)

plot(m, validate_x, validate_y)

# write and read the constructed emulator

write(m, 'step_dgp')

m <- read('step_dgp')

## End(Not run)

Validation and diagnostic plots for a sequential design

Description

This function draws diagnostic and validation plots for a sequential design of a (D)GP emulator or a bundle of (D)GP emulators.

Usage

draw(object, ...)

## S3 method for class 'gp'

draw(object, type = "rmse", log = FALSE, ...)

## S3 method for class 'dgp'

draw(object, type = "rmse", log = FALSE, ...)

## S3 method for class 'bundle'

draw(object, type = "rmse", log = FALSE, emulator = NULL, ...)

Arguments

object |

can be one of the following emulator classes:

|

... |

N/A. |

type |

specifies the type of plot or visualization to generate:

Defaults to |

log |

a bool indicating whether to plot RMSEs, log-losses (for DGP emulators with categorical likelihoods), or custom evaluation metrics on a log scale when |

emulator |

an index or vector of indices of emulators packed in |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

A patchwork object.

Examples

## Not run:

# See design() for an example.

## End(Not run)

Get the number of threads

Description

This function gets the number of threads used for parallel computations involved in the package.

Usage

get_thread_num()

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

the number of threads.

Gaussian process emulator construction

Description

This function builds and trains a GP emulator.

Usage

gp(

X,

Y,

name = "sexp",

lengthscale = rep(0.1, ncol(X)),

bounds = NULL,

prior = "ref",

nugget_est = FALSE,

nugget = ifelse(nugget_est, 0.01, 1e-08),

scale_est = TRUE,

scale = 1,

training = TRUE,

verb = TRUE,

check_rep = TRUE,

vecchia = FALSE,

M = 25,

ord = NULL,

id = NULL

)

Arguments

X |

a matrix where each row is an input data point and each column is an input dimension. |

Y |

a matrix with only one column and each row being an output data point. |

name |

kernel function to be used. Either |

lengthscale |

initial values of lengthscales in the kernel function. It can be a single numeric value or a vector of length

Defaults to a vector of |

bounds |

the lower and upper bounds of lengthscales in the kernel function. It is a vector of length two where the first element is

the lower bound and the second element is the upper bound. The bounds will be applied to all lengthscales in the kernel function. Defaults

to |

prior |

prior to be used for Maximum a Posterior for lengthscales and nugget of the GP: gamma prior ( |

nugget_est |

a bool indicating if the nugget term is to be estimated:

Defaults to |

nugget |

the initial nugget value. If |

scale_est |

a bool indicating if the variance is to be estimated:

Defaults to |

scale |

the initial variance value. If |

training |

a bool indicating if the initialized GP emulator will be trained.

When set to |

verb |

a bool indicating if the trace information on GP emulator construction and training will be printed during function execution.

Defaults to |

check_rep |

|

vecchia |

a bool indicating whether to use Vecchia approximation for large-scale GP emulator construction and prediction. Defaults to |

M |

the size of the conditioning set for the Vecchia approximation in the GP emulator training. Defaults to |

ord |

an R function that returns the ordering of the input to the GP emulator for the Vecchia approximation. The function must satisfy the following basic rules:

If |

id |

an ID to be assigned to the GP emulator. If an ID is not provided (i.e., |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An S3 class named gp that contains five slots:

-

id: A number or character string assigned through theidargument. -

data: a list that contains two elements:XandYwhich are the training input and output data respectively. -

specs: a list that contains seven elements:-

kernel: the type of the kernel function used. Either"sexp"for squared exponential kernel or"matern2.5"for Matérn-2.5 kernel. -

lengthscales: a vector of lengthscales in the kernel function. -

scale: the variance value in the kernel function. -

nugget: the nugget value in the kernel function. -

vecchia: whether the Vecchia approximation is used for the GP emulator training. -

M: the size of the conditioning set for the Vecchia approximation in the GP emulator training.

-

-

constructor_obj: a 'python' object that stores the information of the constructed GP emulator. -

container_obj: a 'python' object that stores the information for the linked emulation. -

emulator_obj: a 'python' object that stores the information for the predictions from the GP emulator.

The returned gp object can be used by

-

predict()for GP predictions. -

validate()for LOO and OOS validations. -

plot()for validation plots. -

lgp()for linked (D)GP emulator constructions. -

summary()to summarize the trained GP emulator. -

write()to save the GP emulator to a.pklfile. -

design()for sequential designs. -

update()to update the GP emulator with new inputs and outputs.

Note

Any R vector detected in X and Y will be treated as a column vector and automatically converted into a single-column

R matrix. Thus, if X is a single data point with multiple dimensions, it must be given as a matrix.

References

Gu, M. (2019). Jointly robust prior for Gaussian stochastic process in emulation, calibration and variable selection. Bayesian Analysis, 14(3), 857-885.

Katzfuss, M., Guinness, J., & Lawrence, E. (2022). Scaled Vecchia approximation for fast computer-model emulation. SIAM/ASA Journal on Uncertainty Quantification, 10(2), 537-554.

Examples

## Not run:

# load the package and the Python env

library(dgpsi)

# construct a step function

f <- function(x) {

if (x < 0.5) return(-1)

if (x >= 0.5) return(1)

}

# generate training data

X <- seq(0, 1, length = 10)

Y <- sapply(X, f)

# training

m <- gp(X, Y)

# summarizing

summary(m)

# LOO cross validation

m <- validate(m)

plot(m)

# prediction

test_x <- seq(0, 1, length = 200)

m <- predict(m, x = test_x)

# OOS validation

validate_x <- sample(test_x, 10)

validate_y <- sapply(validate_x, f)

plot(m, validate_x, validate_y)

# write and read the constructed emulator

write(m, 'step_gp')

m <- read('step_gp')

## End(Not run)

'python' environment initialization

Description

This function initializes the 'python' environment for the package.

Usage

init_py(

py_ver = NULL,

dgpsi_ver = NULL,

reinstall = FALSE,

uninstall = FALSE,

verb = TRUE

)

Arguments

py_ver |

a string that gives the 'python' version to be installed. Supported versions are 3.10, 3.11, and 3.12.

If |

dgpsi_ver |

a string that gives the 'python' version of 'dgpsi' to be used. If

|

reinstall |

a bool that indicates whether to reinstall the 'python' version of 'dgpsi' specified

in |

uninstall |

a bool that indicates whether to uninstall the 'python' version of 'dgpsi' specified

in |

verb |

a bool indicating if trace information will be printed during function execution.

Defaults to |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

No return value, called to install required 'python' environment.

Examples

## Not run:

# See gp(), dgp(), or lgp() for an example.

## End(Not run)

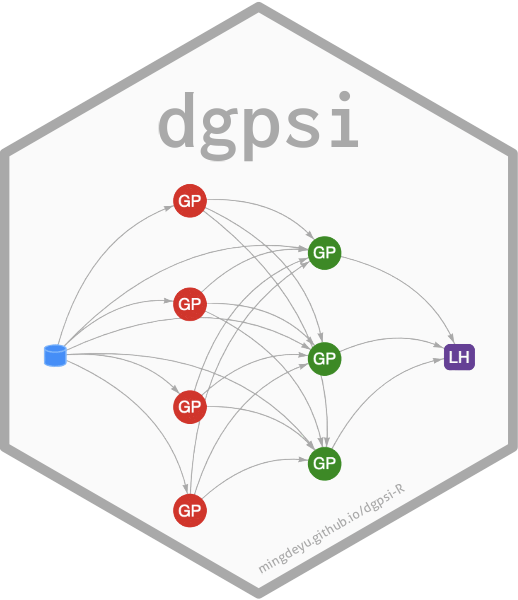

Linked (D)GP emulator construction

Description

This function constructs a linked (D)GP emulator for a model chain or network.

Usage

lgp(struc, emulators, B = 10, activate = TRUE, verb = TRUE, id = NULL)

Arguments

struc |

a data frame that defines the connection structure between emulators in the linked system, with the following columns:

Each row represents a single one-to-one connection between a specified output dimension of |

emulators |

a list of emulator objects, each containing an If the same emulator is used multiple times within the linked system, the list must contain distinct copies

of that emulator, each with a unique ID stored in their |

B |

the number of imputations used for prediction. Increase the value to refine representation of

imputation uncertainty. If the system consists of only GP emulators, |

activate |

a bool indicating whether the initialized linked emulator should be activated:

Defaults to |

verb |

a bool indicating if the trace information on linked (D)GP emulator construction should be printed during the function call.

Defaults to |

id |

an ID to be assigned to the linked (D)GP emulator. If an ID is not provided (i.e., |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An S3 class named lgp that contains three slots:

-

id: A number or character string assigned through theidargument. -

constructor_obj: a list of 'python' objects that stores the information of the constructed linked emulator. -

emulator_obj, a 'python' object that stores the information for predictions from the linked emulator. -

specs: a list that contains-

seed: the random seed generated to produce the imputations. This information is stored for reproducibility when the linked (D)GP emulator (that was saved bywrite()with the light optionlight = TRUE) is loaded back to R byread(). -

B: the number of imputations used to generate the linked (D)GP emulator. -

metadata: a data frame providing configuration details for each emulator in the linked system, with following columns:-

Emulator: the ID of an emulator. -

Layer: the layer in the linked system where the emulator is positioned. A lowerLayernumber indicates a position closer to the input, with layer numbering increasing as you move away from the input. -

Pos_in_Layer: the position of the emulator within its layer. A lowerPos_in_Layernumber indicates a position higher up in that layer. -

Total_Input_Dims: the total number of input dimensions of the emulator. -

Total_Output_Dims: the total number of output dimensions of the emulator.

-

-

struc: The linked system structure, as supplied bystruc.

-

The returned lgp object can be used by

-

predict()for linked (D)GP predictions. -

validate()for OOS validation. -

plot()for validation plots. -

summary()to summarize the constructed linked (D)GP emulator. -

write()to save the linked (D)GP emulator to a.pklfile.

Examples

## Not run:

# load the package and the Python env

library(dgpsi)

# model 1

f1 <- function(x) {

(sin(7.5*x)+1)/2

}

# model 2

f2 <- function(x) {

2/3*sin(2*(2*x - 1))+4/3*exp(-30*(2*(2*x-1))^2)-1/3

}

# linked model

f12 <- function(x) {

f2(f1(x))

}

# training data for Model 1

X1 <- seq(0, 1, length = 9)

Y1 <- sapply(X1, f1)

# training data for Model 2

X2 <- seq(0, 1, length = 13)

Y2 <- sapply(X2, f2)

# emulation of model 1

m1 <- gp(X1, Y1, name = "matern2.5", id = "emulator1")

# emulation of model 2

m2 <- dgp(X2, Y2, depth = 2, name = "matern2.5", id = "emulator2")

struc <- data.frame(From_Emulator = c("Global", "emulator1"),

To_Emulator = c("emulator1", "emulator2"),

From_Output = c(1, 1),

To_Input = c(1, 1))

emulators <- list(m1, m2)

# construct the linked emulator for visual inspection

m_link <- lgp(struc, emulators, activate = FALSE)

# visual inspection

summary(m_link)

# build the linked emulator for prediction

m_link <- lgp(struc, emulators, activate = TRUE)

test_x <- seq(0, 1, length = 300)

m_link <- predict(m_link, x = test_x)

# OOS validation

validate_x <- sample(test_x, 20)

validate_y <- sapply(validate_x, f12)

plot(m_link, validate_x, validate_y, style = 2)

# write and read the constructed linked emulator

write(m_link, 'linked_emulator')

m_link <- read('linked_emulator')

## End(Not run)

Locate the next design point for a (D)GP emulator or a bundle of (D)GP emulators using MICE

Description

This function searches from a candidate set to locate the next design point(s) to be added to a (D)GP emulator or a bundle of (D)GP emulators using the Mutual Information for Computer Experiments (MICE), see the reference below.

Usage

mice(object, ...)

## S3 method for class 'gp'

mice(

object,

x_cand = NULL,

n_cand = 200,

batch_size = 1,

M = 50,

nugget_s = 1e-06,

workers = 1,

limits = NULL,

int = FALSE,

...

)

## S3 method for class 'dgp'

mice(

object,

x_cand = NULL,

n_cand = 200,

batch_size = 1,

M = 50,

nugget_s = 1e-06,

workers = 1,

limits = NULL,

int = FALSE,

aggregate = NULL,

...

)

## S3 method for class 'bundle'

mice(

object,

x_cand = NULL,

n_cand = 200,

batch_size = 1,

M = 50,

nugget_s = 1e-06,

workers = 1,

limits = NULL,

int = FALSE,

aggregate = NULL,

...

)

Arguments

object |

can be one of the following:

|

... |

any arguments (with names different from those of arguments used in |

x_cand |

a matrix (with each row being a design point and column being an input dimension) that gives a candidate set

from which the next design point(s) are determined. If |

n_cand |

an integer specifying the size of the candidate set to be generated for selecting the next design point(s).

This argument is used only when |

batch_size |

an integer that gives the number of design points to be chosen.

Defaults to |

M |

the size of the conditioning set for the Vecchia approximation in the criterion calculation. This argument is only used if the emulator |

nugget_s |

the value of the smoothing nugget term used by MICE. Defaults to |

workers |

the number of processes to be used for the criterion calculation. If set to |

limits |

a two-column matrix that gives the ranges of each input dimension, or a vector of length two if there is only one input dimension.

If a vector is provided, it will be converted to a two-column row matrix. The rows of the matrix correspond to input dimensions, and its

first and second columns correspond to the minimum and maximum values of the input dimensions. This

argument is only used when |

int |

a bool or a vector of bools that indicates if an input dimension is an integer type. If a single bool is given, it will be applied to

all input dimensions. If a vector is provided, it should have a length equal to the input dimensions and will be applied to individual

input dimensions. This argument is only used when |

aggregate |

an R function that aggregates scores of the MICE across different output dimensions (if

Set to |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

If

x_candis notNULL:When

objectis an instance of thegpclass, a vector of lengthbatch_sizeis returned, containing the positions (row numbers) of the next design points fromx_cand.When

objectis an instance of thedgpclass, a vector of lengthbatch_size * Dis returned, containing the positions (row numbers) of the next design points fromx_candto be added to the DGP emulator.-

Dis the number of output dimensions of the DGP emulator if no likelihood layer is included. For a DGP emulator with a

HeteroorNegBinlikelihood layer,D = 2.For a DGP emulator with a

Categoricallikelihood layer,D = 1for binary output orD = Kfor multi-class output withKclasses.

-

When

objectis an instance of thebundleclass, a matrix is returned withbatch_sizerows and a column for each emulator in the bundle, containing the positions (row numbers) of the next design points fromx_candfor individual emulators.

If

x_candisNULL:When

objectis an instance of thegpclass, a matrix withbatch_sizerows is returned, giving the next design points to be evaluated.When

objectis an instance of thedgpclass, a matrix withbatch_size * Drows is returned, where:-

Dis the number of output dimensions of the DGP emulator if no likelihood layer is included. For a DGP emulator with a

HeteroorNegBinlikelihood layer,D = 2.For a DGP emulator with a

Categoricallikelihood layer,D = 1for binary output orD = Kfor multi-class output withKclasses.

-

When

objectis an instance of thebundleclass, a list is returned with a length equal to the number of emulators in the bundle. Each element of the list is a matrix withbatch_sizerows, where each row represents a design point to be added to the corresponding emulator.

Note

The first column of the matrix supplied to the first argument of aggregate must correspond to the first output dimension of the DGP emulator

if object is an instance of the dgp class, and so on for subsequent columns and dimensions. If object is an instance of the bundle class,

the first column must correspond to the first emulator in the bundle, and so on for subsequent columns and emulators.

References

Beck, J., & Guillas, S. (2016). Sequential design with mutual information for computer experiments (MICE): emulation of a tsunami model. SIAM/ASA Journal on Uncertainty Quantification, 4(1), 739-766.

Examples

## Not run:

# load packages and the Python env

library(lhs)

library(dgpsi)

# construct a 1D non-stationary function

f <- function(x) {

sin(30*((2*x-1)/2-0.4)^5)*cos(20*((2*x-1)/2-0.4))

}

# generate the initial design

X <- maximinLHS(10,1)

Y <- f(X)

# training a 2-layered DGP emulator with the global connection off

m <- dgp(X, Y, connect = F)

# generate a candidate set

x_cand <- maximinLHS(200,1)

# locate the next design point using MICE

next_point <- mice(m, x_cand = x_cand)

X_new <- x_cand[next_point,,drop = F]

# obtain the corresponding output at the located design point

Y_new <- f(X_new)

# combine the new input-output pair to the existing data

X <- rbind(X, X_new)

Y <- rbind(Y, Y_new)

# update the DGP emulator with the new input and output data and refit

m <- update(m, X, Y, refit = TRUE)

# plot the LOO validation

plot(m)

## End(Not run)

Calculate the predictive negative log-likelihood

Description

This function computes the predictive negative log-likelihood from a DGP emulator with a likelihood layer.

Usage

nllik(object, x, y)

Arguments

object |

an instance of the |

x |

a matrix where each row is an input testing data point and each column is an input dimension. |

y |

a matrix with only one column where each row is a scalar-valued testing output data point. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object is returned with an additional slot named NLL that contains two elements.

The first one, named meanNLL, is a scalar that gives the average negative predicted log-likelihood

across all testing data points. The second one, named allNLL, is a vector that gives the negative predicted

log-likelihood for each testing data point.

Pack GP and DGP emulators into a bundle

Description

This function packs GP emulators and DGP emulators into a bundle class for

sequential designs if each emulator emulates one output dimension of the underlying simulator.

Usage

pack(..., id = NULL)

Arguments

... |

a sequence or a list of emulators produced by |

id |

an ID to be assigned to the bundle emulator. If an ID is not provided (i.e., |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An S3 class named bundle to be used by design() for sequential designs. It has:

a slot called

idthat is assigned through theidargument.-

N slots named

emulator1,...,emulatorN, each of which contains a GP or DGP emulator, where N is the number of emulators that are provided to the function. a slot called

datawhich contains two elementsXandY.Xcontains N matrices namedemulator1,...,emulatorNthat are training input data for different emulators.Ycontains N single-column matrices namedemulator1,...,emulatorNthat are training output data for different emulators.

Examples

## Not run:

# load packages

library(lhs)

library(dgpsi)

# construct a function with a two-dimensional output

f <- function(x) {

y1 = sin(30*((2*x-1)/2-0.4)^5)*cos(20*((2*x-1)/2-0.4))

y2 = 1/3*sin(2*(2*x - 1))+2/3*exp(-30*(2*(2*x-1))^2)+1/3

return(cbind(y1,y2))

}

# generate the initial design

X <- maximinLHS(10,1)

Y <- f(X)

# generate the validation data

validate_x <- maximinLHS(30,1)

validate_y <- f(validate_x)

# training a 2-layered DGP emulator with respect to each output with the global connection off

m1 <- dgp(X, Y[,1], connect = F)

m2 <- dgp(X, Y[,2], connect = F)

# specify the range of the input dimension

lim <- c(0, 1)

# pack emulators to form an emulator bundle

m <- pack(m1, m2)

# 1st wave of the sequential design with 10 iterations and the target RMSE of 0.01

m <- design(m, N = 10, limits = lim, f = f, x_test = validate_x, y_test = validate_y, target = 0.01)

# 2rd wave of the sequential design with additional 10 iterations and the same target

m <- design(m, N = 10, limits = lim, f = f, x_test = validate_x, y_test = validate_y, target = 0.01)

# draw sequential designs of the two packed emulators

draw(m, type = 'design')

# inspect the traces of RMSEs of the two packed emulators

draw(m, type = 'rmse')

# write and read the constructed emulator bundle

write(m, 'bundle_dgp')

m <- read('bundle_dgp')

# unpack the bundle into individual emulators

m_unpacked <- unpack(m)

# plot OOS validations of individual emulators

plot(m_unpacked[[1]], x_test = validate_x, y_test = validate_y[,1])

plot(m_unpacked[[2]], x_test = validate_x, y_test = validate_y[,2])

## End(Not run)

Validation plots of a constructed GP, DGP, or linked (D)GP emulator

Description

This function draws validation plots of a GP, DGP, or linked (D)GP emulator.

Usage

## S3 method for class 'dgp'

plot(

x,

x_test = NULL,

y_test = NULL,

dim = NULL,

method = "mean_var",

sample_size = 50,

style = 1,

min_max = TRUE,

normalize = TRUE,

color = "turbo",

type = "points",

verb = TRUE,

M = 50,

force = FALSE,

cores = 1,

...

)

## S3 method for class 'lgp'

plot(

x,

x_test = NULL,

y_test = NULL,

dim = NULL,

method = "mean_var",

sample_size = 50,

style = 1,

min_max = TRUE,

color = "turbo",

type = "points",

M = 50,

verb = TRUE,

force = FALSE,

cores = 1,

...

)

## S3 method for class 'gp'

plot(

x,

x_test = NULL,

y_test = NULL,

dim = NULL,

method = "mean_var",

sample_size = 50,

style = 1,

min_max = TRUE,

color = "turbo",

type = "points",

verb = TRUE,

M = 50,

force = FALSE,

cores = 1,

...

)

Arguments

x |

can be one of the following emulator classes:

|

x_test |

same as that of |

y_test |

same as that of |

dim |

if

This argument is only used when |

method |

same as that of |

sample_size |

same as that of |

style |

either |

min_max |

a bool indicating if min-max normalization will be used to scale the testing output, RMSE, predictive mean and std from the

emulator. Defaults to |

normalize |

|

color |

a character string indicating the color map to use when

Defaults to |

type |

either |

verb |

a bool indicating if trace information on plotting will be printed during execution.

Defaults to |

M |

same as that of |

force |

same as that of |

cores |

same as that of |

... |

N/A. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

A patchwork object.

Note

-

plot()callsvalidate()internally to obtain validation results for plotting. However,plot()will not export the emulator object with validation results. Instead, it only returns the plotting object. For small-scale validations (i.e., small training or testing data points), direct execution ofplot()works well. However, for moderate- to large-scale validation, it is recommended to first runvalidate()to obtain and store validation results in the emulator object, and then supply the object toplot().plot()checks the object'slooandoosslots prior to callingvalidate()and will not perform further calculation if the required information is already stored. -

plot()will only use stored OOS validation ifx_testandy_testare identical to those used byvalidate()to produce the data contained in the object'soosslot, otherwiseplot()will re-evaluate OOS validation before plotting. The returned patchwork::patchwork object contains the ggplot2::ggplot2 objects. One can modify the included individual ggplots by accessing them with double-bracket indexing. See https://patchwork.data-imaginist.com/ for further information.

Examples

## Not run:

# See gp(), dgp(), or lgp() for an example.

## End(Not run)

Prediction from GP, DGP, or linked (D)GP emulators

Description

This function implements prediction from GP, DGP, or linked (D)GP emulators.

Usage

## S3 method for class 'dgp'

predict(

object,

x,

method = "mean_var",

full_layer = FALSE,

sample_size = 50,

M = 50,

cores = 1,

chunks = NULL,

...

)

## S3 method for class 'lgp'

predict(

object,

x,

method = "mean_var",

full_layer = FALSE,

sample_size = 50,

M = 50,

cores = 1,

chunks = NULL,

...

)

## S3 method for class 'gp'

predict(

object,

x,

method = "mean_var",

sample_size = 50,

M = 50,

cores = 1,

chunks = NULL,

...

)

Arguments

object |

an instance of the |

x |

the testing input data:

|

method |

|

full_layer |

a bool indicating whether to output the predictions of all layers. Defaults to |

sample_size |

the number of samples to draw for each given imputation if |

M |

the size of the conditioning set for the Vecchia approximation in the emulator prediction. Defaults to |

cores |

the number of processes to be used for prediction. If set to |

chunks |

the number of chunks that the testing input matrix |

... |

N/A. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

If

objectis an instance of thegpclass:if

method = "mean_var": an updatedobjectis returned with an additional slot calledresultsthat contains two matrices namedmeanfor the predictive means andvarfor the predictive variances. Each matrix has only one column with its rows corresponding to testing positions (i.e., rows ofx).if

method = "sampling": an updatedobjectis returned with an additional slot calledresultsthat contains a matrix whose rows correspond to testing positions and columns correspond tosample_sizenumber of samples drawn from the predictive distribution of GP.

-

If

objectis an instance of thedgpclass:if

method = "mean_var"andfull_layer = FALSE: an updatedobjectis returned with an additional slot calledresultsthat contains two matrices namedmeanfor the predictive means andvarfor the predictive variances respectively. Each matrix has its rows corresponding to testing positions and columns corresponding to DGP global output dimensions (i.e., the number of GP/likelihood nodes in the final layer). If the likelihood node is categorical, the matrices contain the predictive means and variances of the class probabilities, with columns corresponding to different classes.if

method = "mean_var"andfull_layer = TRUE: an updatedobjectis returned with an additional slot calledresultsthat contains two sub-lists namedmeanfor the predictive means andvarfor the predictive variances respectively. Each sub-list contains L (i.e., the number of layers) matrices namedlayer1, layer2,..., layerL. Each matrix has its rows corresponding to testing positions and columns corresponding to output dimensions (i.e., the number of GP/likelihood nodes from the associated layer). If the likelihood node is categorical, the matrices namedlayerLin bothmeanandvarcontain the predictive means and variances of the class probabilities, respectively, with columns corresponding to different classes.if

method = "sampling"andfull_layer = FALSE: an updatedobjectis returned with an additional slot calledresultsthat contains D (i.e., the number of GP/likelihood nodes in the final layer) matrices namedoutput1, output2,..., outputD. If the likelihood node in the final layer is categorical,resultscontains D matrices (where D is the number of classes) of sampled class probabilities, each named according to its corresponding class label. Each matrix inresultshas its rows corresponding to testing positions and columns corresponding to samples of size:B * sample_size, whereBis the number of imputations specified indgp().if

method = "sampling"andfull_layer = TRUE: an updatedobjectis returned with an additional slot calledresultsthat contains L (i.e., the number of layers) sub-lists namedlayer1, layer2,..., layerL. Each sub-list represents samples drawn from the GP/likelihood nodes in the corresponding layer, and contains D (i.e., the number of GP/likelihood nodes in the corresponding layer) matrices namedoutput1, output2,..., outputD. If the likelihood node in the final layer is categorical,layerLcontains D matrices (where D is the number of classes) of sampled class probabilities, each named according to its corresponding class label. Each matrix has its rows corresponding to testing positions and columns corresponding to samples of size:B * sample_size, whereBis the number of imputations specified indgp().

If

objectis an instance of thelgpclass:if

method = "mean_var"andfull_layer = FALSE: an updatedobjectis returned with an additional slot calledresultsthat contains two sub-lists namedmeanfor the predictive means andvarfor the predictive variances respectively. Each sub-list contains K (same number of emulators in the final layer of the system) matrices named using theIDs of the corresponding emulators in the final layer. Each matrix has rows corresponding to global testing positions and columns corresponding to output dimensions of the associated emulator in the final layer.if

method = "mean_var"andfull_layer = TRUE: an updatedobjectis returned with an additional slot calledresultsthat contains two sub-lists namedmeanfor the predictive means andvarfor the predictive variances respectively. Each sub-list contains L (i.e., the number of layers in the emulated system) components namedlayer1, layer2,..., layerL. Each component represents a layer and contains K (same number of emulators in the corresponding layer of the system) matrices named using theIDs of the corresponding emulators in that layer. Each matrix has its rows corresponding to global testing positions and columns corresponding to output dimensions of the associated GP/DGP emulator in the corresponding layer.if

method = "sampling"andfull_layer = FALSE: an updatedobjectis returned with an additional slot calledresultsthat contains K (same number of emulators in the final layer of the system) sub-lists named using theIDs of the corresponding emulators in the final layer. Each sub-list contains D matrices, namedoutput1, output2,..., outputD, that correspond to the output dimensions of the GP/DGP emulator. Each matrix has rows corresponding to testing positions and columns corresponding to samples of size:B * sample_size, whereBis the number of imputations specified inlgp().if

method = "sampling"andfull_layer = TRUE: an updatedobjectis returned with an additional slot calledresultsthat contains L (i.e., the number of layers of the emulated system) sub-lists namedlayer1, layer2,..., layerL. Each sub-list represents a layer and contains K (same number of emulators in the corresponding layer of the system) components named using theIDs of the corresponding emulators in that layer. Each component contains D matrices, namedoutput1, output2,..., outputD, that correspond to the output dimensions of the GP/DGP emulator. Each matrix has its rows corresponding to testing positions and columns corresponding to samples of size:B * sample_size, whereBis the number of imputations specified inlgp().

The results slot will also include:

the value of

M, which represents the size of the conditioning set for the Vecchia approximation, if used, in the emulator prediction.the value of

sample_sizeifmethod = "sampling".

Examples

## Not run:

# See gp(), dgp(), or lgp() for an example.

## End(Not run)

Static pruning of a DGP emulator

Description

This function implements static pruning for a DGP emulator.

Usage

prune(object, control = list(), verb = TRUE)

Arguments

object |

an instance of the |

control |

a list that can supply the following two components to control static pruning of the DGP emulator:

|

verb |

a bool indicating if trace information will be printed during the function execution. Defaults to |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object that could be an instance of gp, dgp, or bundle (of GP emulators) class.

Note

The function requires a DGP emulator that has been trained with a dataset comprising a minimum size equal to

min_sizeincontrol. If the training dataset size is smaller than this, it is recommended that the design of the DGP emulator is enriched and its structure pruned dynamically using thedesign()function. Depending on the design of the DGP emulator, static pruning may not be accurate. It is thus recommended that dynamic pruning is implemented as a part of a sequential design viadesign().The following slots:

-

looandooscreated byvalidate(); and -

resultscreated bypredict();

in

objectwill be removed and not contained in the returned object.-

Examples

## Not run:

# load the package and the Python env

library(dgpsi)

# construct the borehole function over a hypercube

f <- function(x){

x[,1] <- (0.15 - 0.5) * x[,1] + 0.5

x[,2] <- exp((log(50000) - log(100)) * x[,2] + log(100))

x[,3] <- (115600 - 63070) *x[,3] + 63070

x[,4] <- (1110 - 990) * x[,4] + 990

x[,5] <- (116 - 63.1) * x[,5] + 63.1

x[,6] <- (820 - 700) * x[,6] + 700

x[,7] <- (1680 - 1120) * x[,7] + 1120

x[,8] <- (12045 - 9855) * x[,8] + 9855

y <- apply(x, 1, RobustGaSP::borehole)

}

# set a random seed

set_seed(999)

# generate training data

X <- maximinLHS(80, 8)

Y <- f(X)

# generate validation data

validate_x <- maximinLHS(500, 8)

validate_y <- f(validate_x)

# training a DGP emulator with anisotropic squared exponential kernels

m <- dgp(X, Y, share = F)

# OOS validation of the DGP emulator

plot(m, validate_x, validate_y)

# prune the emulator until no more GP nodes are removable

m <- prune(m)

# OOS validation of the resulting emulator

plot(m, validate_x, validate_y)

## End(Not run)

Load the stored emulator

Description

This function loads the .pkl file that stores the emulator.

Usage

read(pkl_file)

Arguments

pkl_file |

the path to and the name of the |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

The S3 class of a GP emulator, a DGP emulator, a linked (D)GP emulator, or a bundle of (D)GP emulators.

Examples

## Not run:

# See gp(), dgp(), lgp(), or pack() for an example.

## End(Not run)

Serialize the constructed emulator

Description

This function serializes the constructed emulator.

Usage

serialize(object, light = TRUE)

Arguments

object |

an instance of the S3 class |

light |

a bool indicating if a light version of the constructed emulator (that requires a small storage) will be serialized.

Defaults to |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

A serialized version of object.

Note

Since the constructed emulators are 'python' objects, they cannot be directly exported to other R processes for parallel

processing. This function provides a solution by converting the emulators into serialized objects, which can be restored

using deserialize() for multi-process parallel implementation.

Examples

## Not run:

library(parallel)

library(dgpsi)

# model

f <- function(x) {

(sin(7.5*x)+1)/2

}

# training data

X <- seq(0, 1, length = 10)

Y <- sapply(X, f)

# train a DGP emulator

m <- dgp(X, Y, name = "matern2.5")

# testing input data

X_dgp <- seq(0, 1, length = 100)

# serialize the DGP emulator

m_serialized <- serialize(m)

# create a cluster with 8 workers for parallel predictions

cl <- makeCluster(8)

# export objects to the cluster

clusterExport(cl, varlist = c("m_serialized", "X_dgp"))

# initialize deserialized object on each worker

res <- clusterEvalQ(cl, {

library(dgpsi)

assign("m_deserialized", deserialize(m_serialized), envir = .GlobalEnv)

})

# perform parallel predictions

results <- parLapply(cl, 1:length(X_dgp), function(i) {

mean_i <- predict(m_deserialized, X_dgp[i])$results$mean

})

# reset the cluster

stopCluster(cl)

# combine mean predictions

pred_mean <- do.call(rbind, results)

## End(Not run)

Set Emulator ID

Description

This function assigns a unique identifier to an emulator.

Usage

set_id(object, id)

Arguments

object |

an emulator object to which the ID will be assigned. |

id |

a unique identifier for the emulator as either a numeric or character string. Ensure this ID does not conflict with other emulator IDs, especially when used in linked emulations. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

The updated object, with the assigned ID stored in its id slot.

Examples

## Not run:

# See lgp() for an example.

## End(Not run)

Reset number of imputations for a DGP emulator

Description

This function resets the number of imputations for prediction from a DGP emulator.

Usage

set_imp(object, B = 5)

Arguments

object |

an instance of the S3 class |

B |

the number of imputations to produce predictions from |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object with the information of B incorporated.

Note

This function is useful when a DGP emulator has been trained and one wants to make faster predictions by decreasing the number of imputations without rebuilding the emulator.

The following slots:

-

looandooscreated byvalidate(); and -

resultscreated bypredict()inobjectwill be removed and not contained in the returned object.

-

Examples

## Not run:

# See design() for an example.

## End(Not run)

Random seed generator

Description

This function initializes a random number generator that sets the random seed in both R and Python to ensure reproducible results from the package.

Usage

set_seed(seed)

Arguments

seed |

a single integer value. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

No return value.

Examples

## Not run:

# See dgp() for an example.

## End(Not run)

Set the number of threads

Description

This function sets the number of threads for parallel computations involved in the package.

Usage

set_thread_num(num)

Arguments

num |

the number of threads. If it is greater than the maximum number of threads available, the number of threads will be set to the maximum value. |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

No return value.

Add or remove the Vecchia approximation

Description

This function adds or removes the Vecchia approximation from a GP, DGP or linked (D)GP emulator

constructed by gp(), dgp() or lgp().

Usage

set_vecchia(object, vecchia = TRUE, M = 25, ord = NULL)

Arguments

object |

an instance of the S3 class |

vecchia |

a bool to indicate the addition or removal of the Vecchia approximation:

Defaults to |

M |

the size of the conditioning set for the Vecchia approximation in the (D)GP emulator training. Defaults to |

ord |

an R function that returns the ordering of the input to the (D)GP emulator for the Vecchia approximation. The function must satisfy the following basic rules:

If |

Details

See further examples and tutorials at https://mingdeyu.github.io/dgpsi-R/.

Value

An updated object with the Vecchia approximation either added or removed.

Note